Code release for Proto-CLIP [ arXiv | Project Page ]

- To download the datasets, please follow the details in DATASET.md.

- To download the FewSOL dataset variants [52 | 198], please use this link.

- Note: Please make sure to place all the datasets in the `DATA/` directory.
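Before running experiments, it can help to confirm that the datasets actually landed in `DATA/`. The helper below is a minimal sketch and is not part of the Proto-CLIP repository: the function name `check_data_dir` is hypothetical, and it only checks that the directory exists and lists its subfolders. It does not validate folder names or contents; for the expected layout, refer to DATASET.md.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of the repository): check that the DATA/
# directory exists and list the dataset folders placed inside it.
# Run from the repository root, or pass another path as the first argument.
check_data_dir() {
  local data_dir="${1:-DATA}"
  if [ ! -d "$data_dir" ]; then
    echo "missing directory: $data_dir" >&2
    return 1
  fi
  # Print the name of each immediate subdirectory, sorted, one per line
  find "$data_dir" -mindepth 1 -maxdepth 1 -type d -exec basename {} \; | sort
}
```

For example, `check_data_dir` run from the repository root prints one line per dataset folder, or exits with status 1 if `DATA/` is missing.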

```shell
# create conda environment
conda create -n proto-clip python=3.9

# activate the environment
conda activate proto-clip

# install dependencies
pip install -r requirements.txt

# install the matching versions of torch and torchvision
conda install pytorch torchvision torchaudio pytorch-cuda=11.7 -c pytorch -c nvidia
```
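After installation, a quick check can confirm that you are inside the `proto-clip` environment and that the installed PyTorch build can see a GPU. This is a sketch rather than a script shipped with the repository: the function names are hypothetical, and it relies only on conda setting `CONDA_DEFAULT_ENV` on activation and on the standard `torch.__version__`, `torchvision.__version__` and `torch.cuda.is_available()` attributes.

```shell
#!/usr/bin/env bash
# Hypothetical post-install checks (not part of the repository).

# Succeeds only if the active conda environment is "proto-clip";
# `conda activate` sets CONDA_DEFAULT_ENV to the environment name.
in_proto_clip_env() {
  [ "${CONDA_DEFAULT_ENV:-}" = "proto-clip" ]
}

# Prints the torch and torchvision versions and whether torch can see a GPU.
check_torch() {
  python -c 'import torch, torchvision; print(torch.__version__, torchvision.__version__, torch.cuda.is_available())'
}
```

Usage: `in_proto_clip_env && check_torch`. If the last value printed is `False`, the installed CUDA build may not match your driver, and the `pytorch-cuda` version in the install command above may need adjusting.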

| Adapter | Adapter-Alias |
| --- | --- |
| 3xConv | conv-3x |
| 2xConv | conv-2x |
| MLP | fc |

- For details about adapter aliases, please check the supplementary material.
- For dataset aliases, please check `datasets/__init__.py`.
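The adapter table maps each adapter name to the alias passed to `--adapter`. A small lookup function, sketched below under the assumption that you script your runs in bash, lets you catch a mistyped alias before launching a job. The function name `adapter_alias` is hypothetical and not part of the repository.

```shell
#!/usr/bin/env bash
# Hypothetical helper: translate an adapter name from the table into the
# alias expected by main.py's --adapter option.
adapter_alias() {
  case "$1" in
    3xConv) echo "conv-3x" ;;
    2xConv) echo "conv-2x" ;;
    MLP)    echo "fc" ;;
    *)      echo "unknown adapter: $1" >&2; return 1 ;;
  esac
}
```

For example, `adapter_alias 2xConv` prints `conv-2x`, while an unknown name prints an error and returns a non-zero status.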

```shell
CUDA_VISIBLE_DEVICES=<GPU_ID> \
python main.py \
  --config <config-file> \
  --dataset <dataset-alias> \
  --logs tb_logs \
  --alpha <alpha> \
  --beta <beta> \
  --adapter <adapter-alias> \
  <vl-flag> \
  <test-flag>
```

- `config-file`: Configuration file path for the experiment. Default config files are in the `configs/` directory.
- `dataset-alias`: Alias of the dataset to be used for the experiment.
- `alpha`: alpha hyperparameter for the selected dataset.
- `beta`: beta hyperparameter for the selected dataset.
- `adapter-alias`: Adapter alias for the experiment.
- `vl-flag`: To train the text memory, use `""`; otherwise, use `"--train_vis_memory_only"`.
- `test-flag`: To train, use `""`; to test only, use `"--only_test"`.
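When running several configurations, it can be convenient to generate the command lines from a script. The sketch below assembles a `main.py` invocation from the options listed above and prints it instead of executing it. The function name `build_cmd`, the config path `configs/example.yaml`, the dataset alias `my-dataset`, and the alpha/beta grid are hypothetical placeholders, not recommended settings; use the per-dataset values and aliases from the repository.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build a main.py command line and print it (dry run).
# Arguments: GPU id, config file, dataset alias, alpha, beta, adapter alias,
# vl-flag, test-flag. Pass "" for a flag to omit it.
build_cmd() {
  local gpu="$1" config="$2" dataset="$3" alpha="$4" beta="$5" adapter="$6"
  local vl_flag="$7" test_flag="$8"
  local cmd="CUDA_VISIBLE_DEVICES=$gpu python main.py --config $config --dataset $dataset --logs tb_logs --alpha $alpha --beta $beta --adapter $adapter"
  # Empty flags are left out of the command entirely
  if [ -n "$vl_flag" ]; then cmd+=" $vl_flag"; fi
  if [ -n "$test_flag" ]; then cmd+=" $test_flag"; fi
  echo "$cmd"
}

# Example: print one command per (alpha, beta) pair of a small placeholder grid.
for alpha in 0.5 1.0; do
  for beta in 1.0 5.0; do
    build_cmd 0 configs/example.yaml my-dataset "$alpha" "$beta" conv-3x "" ""
  done
done
```

Printing rather than executing keeps the sketch free of side effects; paste a printed line into a shell, or pipe the output to `bash`, to run it.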

Note: Please use `main.qt.py` for experiments involving Proto-CLIP-F-QT.

To view the training logs in TensorBoard:

```shell
tensorboard --logdir tb_logs
```

Demo: User-command-oriented (Fetch) robot grasping using Proto-CLIP predictions.

For the real-world demo, please use proto-clip-toolkit (sample code). Please check the PyPI package here.

Please check the pretrained checkpoints to use/work with the proto-clip-toolkit.

NOTE: Use the dataset that matches the checkpoint.

- Project Page

- Please check the FAQs here

- Real World Demo | Playlist

- Results for Joint Object Segmentation and Few-Shot Classification in the Real World

- CLIP vs Proto-CLIP t-SNE visualization

- Barnes-Hut t-SNE visualization using Proto-CLIP-F trained on FewSOL [198 classes] dataset

The following three options are available for any clarifications, comments, or suggestions:

- Join the discussion forum.

- Open an issue.

- Contact Jishnu.

Please cite Proto-CLIP if it helps your research:

@article{padalunkal2023protoclip,

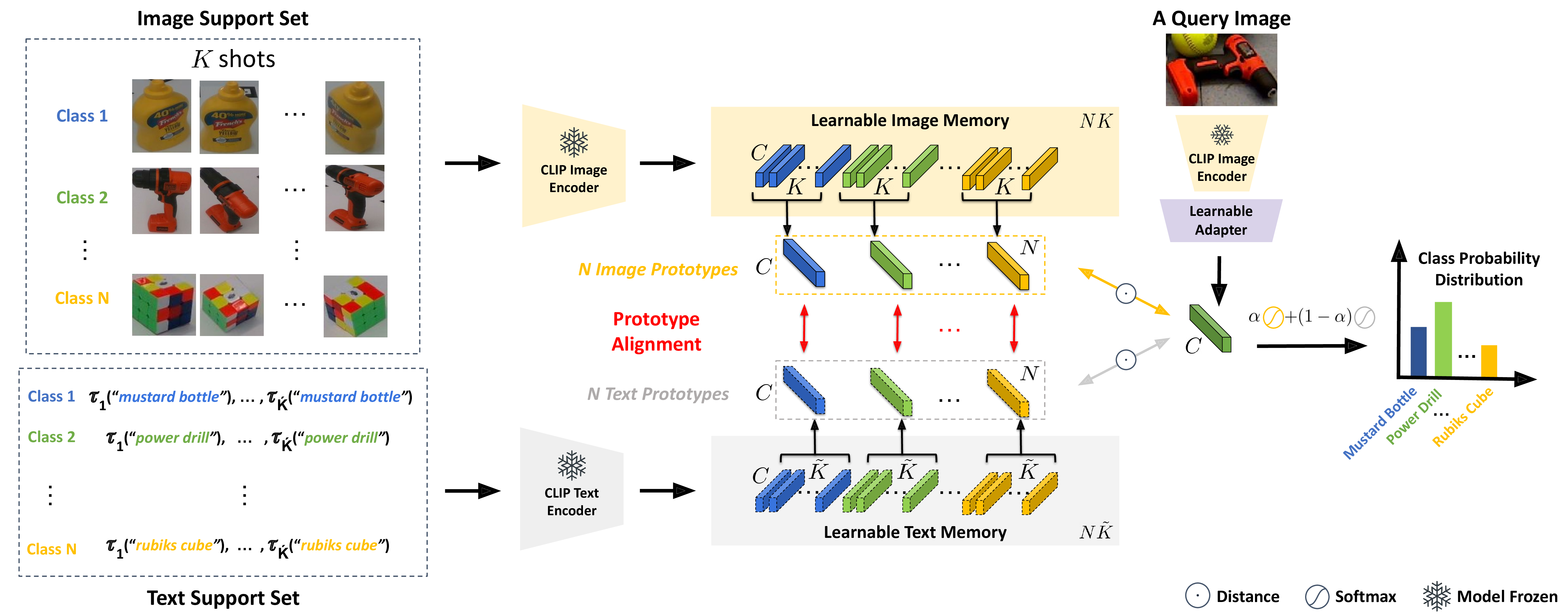

title={Proto-CLIP: Vision-Language Prototypical Network for Few-Shot Learning},

author={Jishnu Jaykumar P and Kamalesh Palanisamy and Yu-Wei Chao and Xinya Du and Yu Xiang},

archivePrefix={arXiv},

eprint={2307.03073},

year={2023}

}