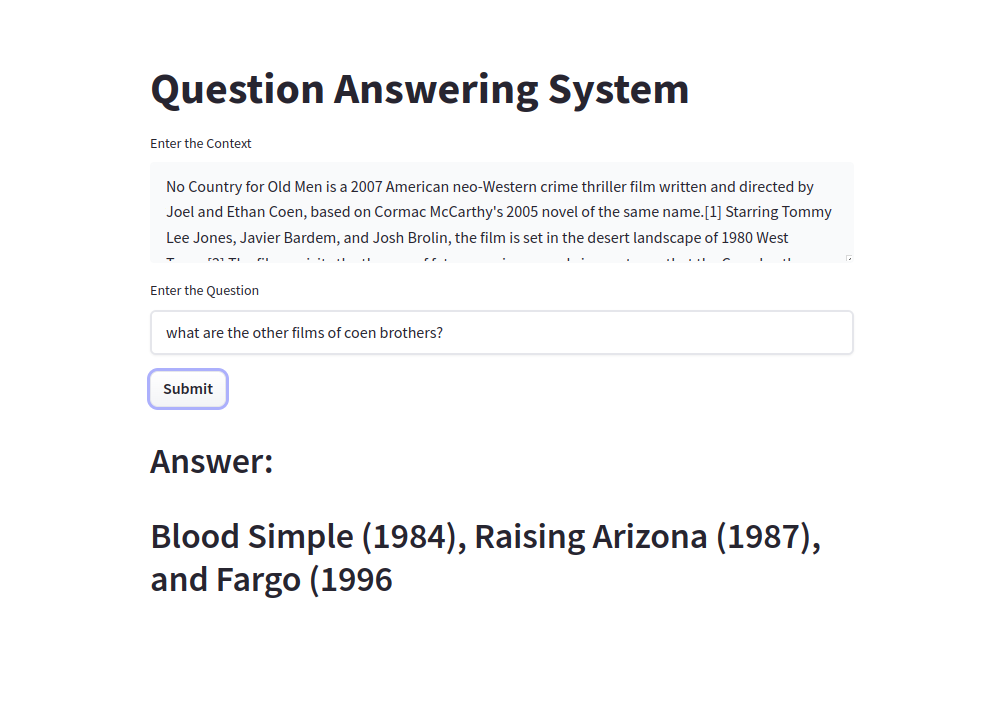

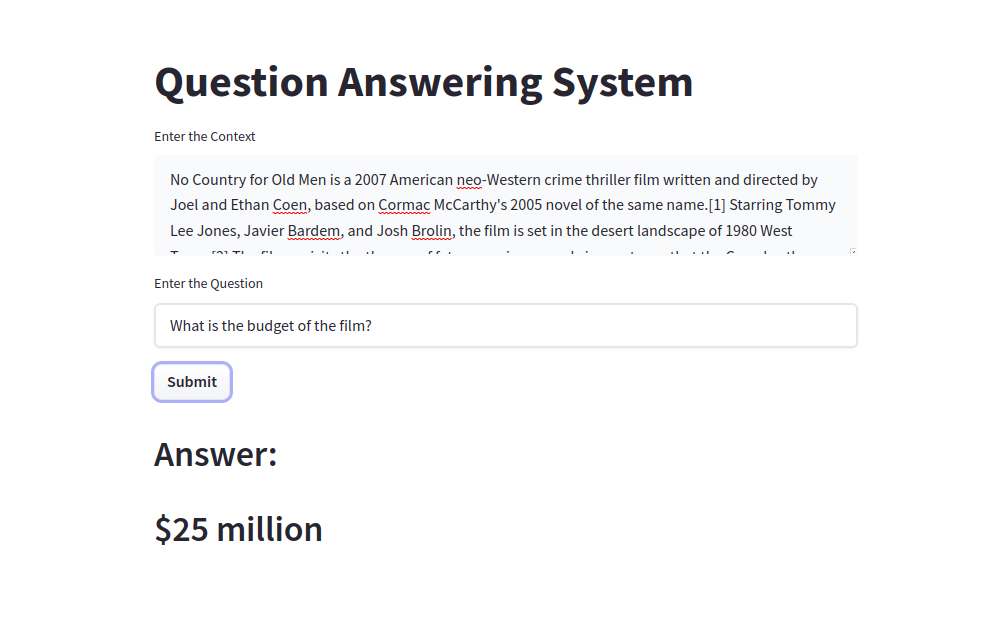

The Question Answering System is a web app that answers questions based on the context supplied in a text area. The model is based on RoBERTa, a Transformer model, fine-tuned on SQuAD (the Stanford Question Answering Dataset) with the Haystack library, an open-source NLP framework that leverages pre-trained Transformer models. The web app is built with Streamlit and deployed on Hugging Face Spaces.

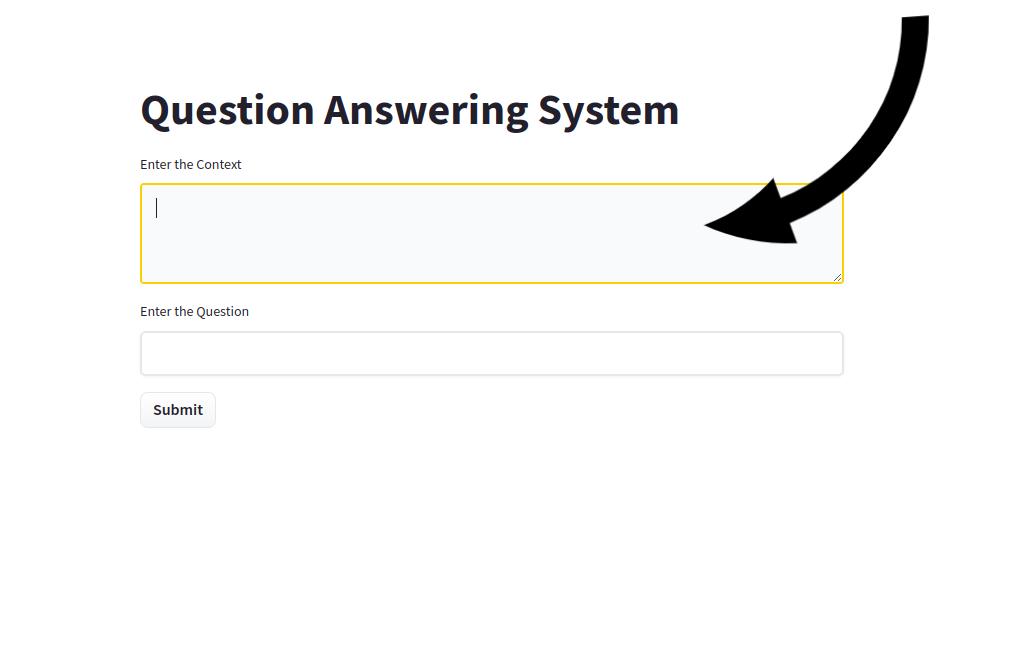

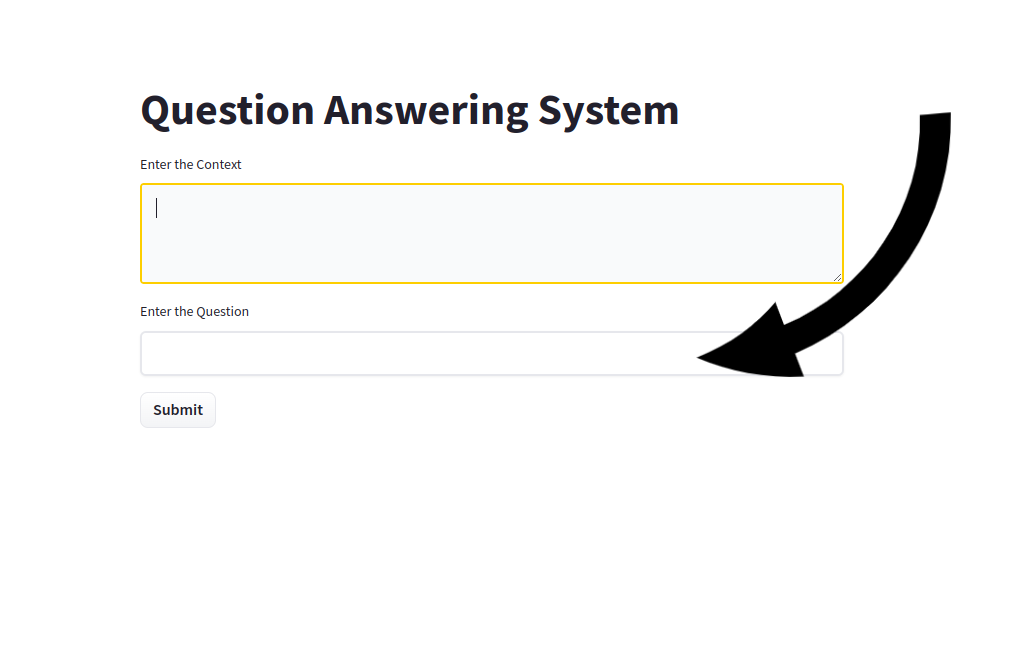

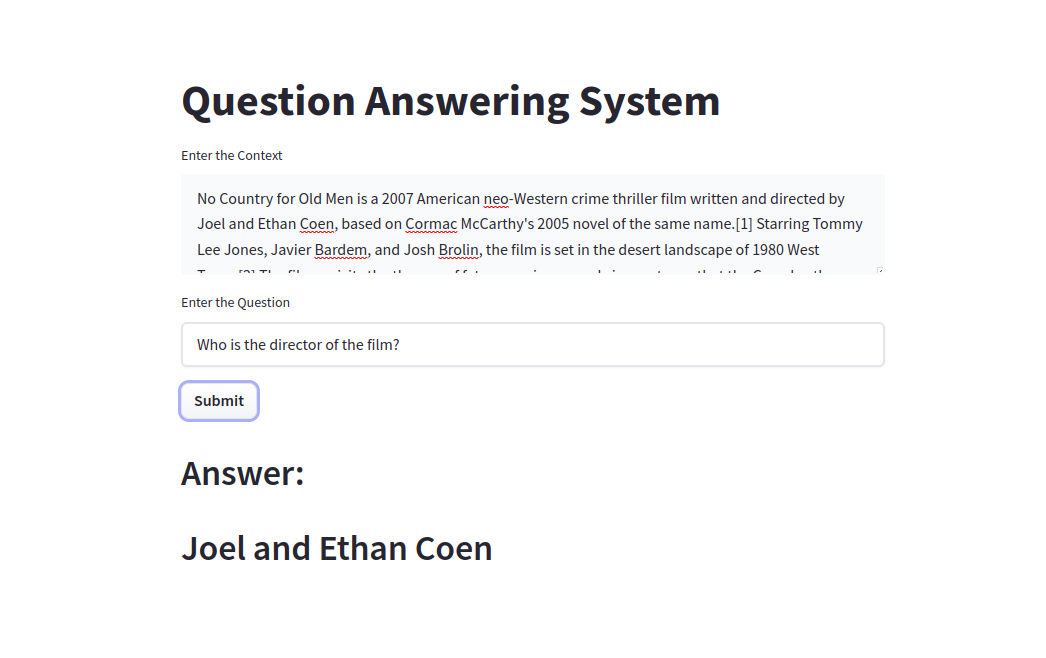

The web app contains two input boxes, one for the context and one for the question, and a Submit button. The user should paste the context before asking the question.
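A layout like this could be sketched in Streamlit roughly as follows. This is an illustrative sketch, not the app's actual source: the helper names `missing_fields` and `render_app`, the widget labels, and the `answer_fn` callback (any function that takes a question and a context and returns an answer string) are assumptions.

```python
def missing_fields(context: str, question: str) -> list:
    """Return the names of any inputs the user left empty."""
    missing = []
    if not context.strip():
        missing.append("context")
    if not question.strip():
        missing.append("question")
    return missing


def render_app(answer_fn) -> None:
    """Draw the context box, the question box, and the Submit button."""
    # Imported lazily so the validation helper above works without Streamlit.
    import streamlit as st

    st.title("Question Answering System")
    context = st.text_area("Context", height=250)
    question = st.text_input("Question")

    if st.button("Submit"):
        missing = missing_fields(context, question)
        if missing:
            # Remind the user to paste the context before asking.
            st.warning("Please fill in: " + ", ".join(missing))
        else:
            st.write(answer_fn(question, context))
```

To try it, a script such as `app.py` (a hypothetical file name) would end with a call to `render_app(...)`, passing a function that wraps the fine-tuned model, and would be launched with `streamlit run app.py`.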

RoBERTa is a transformer model pretrained on a large corpus of English data in a self-supervised fashion. It builds on BERT's language masking strategy, in which the system learns to predict intentionally hidden sections of text within otherwise unannotated language examples. BERT uses static masking: the mask is generated once during preprocessing, so the same parts of a sentence are masked in every epoch. In contrast, RoBERTa uses dynamic masking, in which different parts of the sentence are masked in different epochs. Because the model sees more varied masking patterns for the same text, it becomes more robust.
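The difference between the two strategies can be illustrated with a small sketch in plain Python. It is a simplification for illustration, not RoBERTa's actual preprocessing: real masked language modelling operates on subword tokens and sometimes substitutes a random or unchanged token instead of `[MASK]`. The function names, the seed, and the whitespace tokenisation are assumptions.

```python
import random

MASK = "[MASK]"


def mask_tokens(tokens, rng, mask_prob=0.15):
    """Replace each token with [MASK] with probability mask_prob."""
    return [MASK if rng.random() < mask_prob else tok for tok in tokens]


def static_masking(tokens, n_epochs, seed=0):
    """Mask once up front and reuse the same pattern in every epoch."""
    masked = mask_tokens(tokens, random.Random(seed))
    return [list(masked) for _ in range(n_epochs)]


def dynamic_masking(tokens, n_epochs, seed=0):
    """Draw a fresh mask pattern each time the sentence is presented."""
    rng = random.Random(seed)
    return [mask_tokens(tokens, rng) for _ in range(n_epochs)]


sentence = "the quick brown fox jumps over the lazy dog".split()
for epoch, view in enumerate(dynamic_masking(sentence, n_epochs=3), start=1):
    print(f"epoch {epoch}:", " ".join(view))
```

With `static_masking`, every epoch yields an identical masked sentence, whereas `dynamic_masking` keeps drawing from the same random generator, so the hidden positions generally change from one epoch to the next.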

Haystack is a Python framework for developing end-to-end question answering systems. It provides a flexible way to use the latest NLP models to solve QA tasks in real-world settings with very large document collections. Haystack is useful for diverse QA tasks such as financial governance, knowledge base search, and competitive intelligence. It is available as an open-source library, with a paid enterprise version that offers additional features.

Project Roadmap

- Fine-tuning the RoBERTa transformer model using Haystack.

- Importing the FARMReader class from Haystack.

- Downloading the RoBERTa model from Hugging Face.

- Training the model on the SQuAD dataset for 5 epochs.

- Saving the model for our web app.

- Building the web app with Streamlit.

- Deploying the web app to Hugging Face Spaces.
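
The fine-tuning steps in the roadmap could look roughly like the sketch below, assuming Haystack 1.x, where `FARMReader` is imported from `haystack.nodes`. The base checkpoint name `roberta-base`, the helper names, and all paths and file names are placeholders chosen for illustration; the project's actual values are not stated here.

```python
def build_train_args(data_dir, train_filename, save_dir, n_epochs=5, use_gpu=True):
    """Collect the keyword arguments passed to FARMReader.train()."""
    return {
        "data_dir": data_dir,              # folder holding the SQuAD-format JSON
        "train_filename": train_filename,  # training file inside data_dir
        "n_epochs": n_epochs,              # the roadmap trains for 5 epochs
        "use_gpu": use_gpu,
        "save_dir": save_dir,              # where the fine-tuned model is written
    }


def fine_tune_reader(train_args, base_model="roberta-base"):
    """Download the base model from Hugging Face and fine-tune it."""
    # Imported lazily so build_train_args works without Haystack installed.
    from haystack.nodes import FARMReader

    reader = FARMReader(model_name_or_path=base_model,
                        use_gpu=train_args["use_gpu"])
    reader.train(**train_args)  # saves the model to train_args["save_dir"]
    return reader


# Placeholder paths: adjust to wherever the SQuAD file actually lives.
args = build_train_args("data/squad", "train.json", "saved_model")
# reader = fine_tune_reader(args)  # uncomment to run; a GPU is advisable
```

The saved directory can later be loaded by passing it as `model_name_or_path` to `FARMReader`, which is one way the Streamlit app could obtain the fine-tuned model.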