Segmentation networks paint each pixel of an image a color based on its class. This project trains such a network and uses the segmented images to find free space on the road.

The pixels of a road in images are labelled using a Fully Convolutional Network (FCN) with the FCN-8 architecture developed at Berkeley, described in Long et al., "Fully Convolutional Networks for Semantic Segmentation".

A pre-trained VGG-16 is converted to an FCN by converting its fully connected layer to a 1x1 convolution and setting the depth of that layer to the number of classes (i.e. 2, for road and non-road). Skip connections improve the performance of the network: 1x1 convolutions are applied to earlier VGG-16 layers (layers 3 and 4), and the results are added element-wise to the upsampled output of deeper layers. Specifically, the 1x1-convolved layer 7 is upsampled and added to the 1x1-convolved layer 4; that sum is then upsampled and added to the 1x1-convolved layer 3.
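The layer fusion described above can be sketched with NumPy to make the shapes concrete. This is only an illustration under stated assumptions, not the code in main.py: the function names are hypothetical, random weights stand in for the learned 1x1 kernels, and nearest-neighbour upsampling is swapped in for the learned upsampling that an FCN would normally use.

```python
import numpy as np

NUM_CLASSES = 2  # road and non-road


def conv_1x1(features, weights):
    """1x1 convolution: the same linear map applied to every pixel.

    features: (H, W, C_in), weights: (C_in, C_out) -> (H, W, C_out)
    """
    return features @ weights


def upsample_2x(features):
    """Nearest-neighbour 2x upsampling (assumption: a stand-in for the
    learned upsampling used in a real FCN)."""
    return features.repeat(2, axis=0).repeat(2, axis=1)


def fcn8_decoder(layer3, layer4, layer7, rng):
    """Fuse VGG-16 layers 7, 4 and 3 into per-pixel class scores."""
    # Random weights are placeholders for the learned 1x1 kernels.
    w3 = rng.standard_normal((layer3.shape[-1], NUM_CLASSES))
    w4 = rng.standard_normal((layer4.shape[-1], NUM_CLASSES))
    w7 = rng.standard_normal((layer7.shape[-1], NUM_CLASSES))

    score3 = conv_1x1(layer3, w3)
    score4 = conv_1x1(layer4, w4)
    score7 = conv_1x1(layer7, w7)

    fused4 = upsample_2x(score7) + score4  # skip connection from layer 4
    fused3 = upsample_2x(fused4) + score3  # skip connection from layer 3

    # Layer 3 sits at 1/8 of the input resolution, so three more 2x steps
    # bring the scores back to the input size.
    out = fused3
    for _ in range(3):
        out = upsample_2x(out)
    return out
```

For an assumed 160x576 input, the three VGG-16 feature maps would have shapes (20, 72, 256), (10, 36, 512) and (5, 18, 4096), and the decoder returns scores of shape (160, 576, 2).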

- The loss function used is cross entropy

- AdamOptimizer is used for optimization
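To make the two choices above concrete, here is a small NumPy sketch of a per-pixel softmax cross-entropy loss and a single Adam update. It is an illustration, not the project's TensorFlow code: the function names are hypothetical, and the `beta1`, `beta2` and `eps` defaults are assumed common values that this README does not state.

```python
import numpy as np


def softmax_cross_entropy(logits, labels):
    """Mean cross-entropy over pixels.

    logits: (N, num_classes) raw scores, one row per pixel.
    labels: (N,) integer class ids.
    """
    shifted = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    return -log_probs[np.arange(len(labels)), labels].mean()


def adam_step(param, grad, m, v, t, learning_rate=1e-4,
              beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update; t is the 1-based step count.

    beta1, beta2 and eps are assumed defaults, not values from this project.
    """
    m = beta1 * m + (1 - beta1) * grad        # running mean of gradients
    v = beta2 * v + (1 - beta2) * grad ** 2   # running mean of squared gradients
    m_hat = m / (1 - beta1 ** t)              # bias correction
    v_hat = v / (1 - beta2 ** t)
    param = param - learning_rate * m_hat / (np.sqrt(v_hat) + eps)
    return param, m, v
```

With two classes, uninformative scores (equal logits) give a loss of ln 2, roughly 0.693, which is a useful reference point when reading loss values.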

The hyperparameters used for training:

- epochs : 50

- batch_size : 5

- keep_prob : 0.5

- learning_rate : 0.0001

Training loss over the run:

- Average loss per batch is below 0.2 after 2 epochs

- Average loss per batch is below 0.1 after 9 epochs

- Average loss per batch is below 0.05 after 20 epochs

- Average loss per batch is below 0.039 after 30 epochs

- Average loss per batch is below 0.031 after 40 epochs

- Average loss per batch is below 0.022 after 50 epochs
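One way to keep these settings and the loss bookkeeping together is sketched below. The values come from the list above; the `Hyperparameters` class and the two helper functions are hypothetical names used for illustration and are not part of main.py.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Hyperparameters:
    """Training settings taken from the list above."""
    epochs: int = 50
    batch_size: int = 5
    keep_prob: float = 0.5
    learning_rate: float = 1e-4


def average_loss_per_batch(batch_losses):
    """Mean of the per-batch losses recorded during one epoch."""
    return sum(batch_losses) / len(batch_losses)


def first_epoch_below(epoch_averages, threshold):
    """1-based index of the first epoch whose average loss is below
    the threshold, or None if no epoch gets there."""
    for epoch, average in enumerate(epoch_averages, start=1):
        if average < threshold:
            return epoch
    return None
```

Collecting one average per epoch and calling `first_epoch_below` with thresholds such as 0.2 or 0.1 would produce milestones of the kind listed above.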

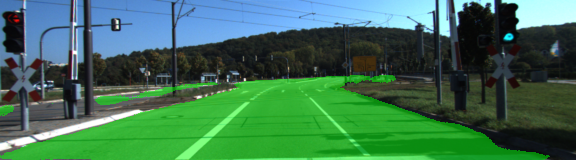

Sample output images for road classification with the segmentation class overlaid upon the input images in green.
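A green overlay of this kind can be produced by blending the predicted road mask into the input image. The sketch below assumes the network yields a per-pixel road probability; the function name, the 0.5 threshold and the 0.5 blend factor are illustrative assumptions rather than the project's actual settings.

```python
import numpy as np


def overlay_road(image, road_prob, threshold=0.5, alpha=0.5):
    """Blend green into the pixels classified as road.

    image:     (H, W, 3) uint8 RGB image.
    road_prob: (H, W) floats in [0, 1], probability that a pixel is road.
    threshold and alpha are assumed values for illustration.
    """
    mask = road_prob > threshold
    green = np.array([0, 255, 0], dtype=np.float32)
    out = image.astype(np.float32)
    out[mask] = (1 - alpha) * out[mask] + alpha * green
    return out.round().astype(np.uint8)
```

Pixels below the threshold are returned unchanged, so the non-road parts of the image stay as they were.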

Make sure you have the following installed:

Download the Kitti Road dataset from here. Extract the dataset into the data folder. This will create the folder data_road with all the training and test images.
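Before training, it can help to confirm that the dataset was extracted where the code expects it. The following is a minimal sketch using only the standard library; the function name is hypothetical, and counting `.png` files assumes the images are stored in that format.

```python
from pathlib import Path


def check_dataset(data_dir="data"):
    """Return the data_road folder and the number of PNG files under it.

    Raises FileNotFoundError if the dataset has not been extracted.
    Assumption: the images are stored as .png files.
    """
    road_dir = Path(data_dir) / "data_road"
    if not road_dir.is_dir():
        raise FileNotFoundError(
            f"Expected the extracted dataset at {road_dir}"
        )
    image_count = sum(1 for _ in road_dir.rglob("*.png"))
    return road_dir, image_count
```

Calling `check_dataset()` from the project root either returns the folder with an image count or fails early with a clear message.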

Implement the code in the main.py module indicated by the "TODO" comments.

Comments marked with the "OPTIONAL" tag are not required to complete the project.

Run the following command to run the project:

python main.py

Note: If you run this in a Jupyter Notebook, system messages, such as those regarding test status, may appear in the terminal rather than in the notebook.

- Ensure you've passed all the unit tests.

- Ensure you pass all points on the rubric.

- Submit the following in a zip file:
  - helper.py
  - main.py
  - project_tests.py
  - Newest inference images from the runs folder
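The submission archive can be assembled with a short script. This is a sketch under assumptions: the function name is hypothetical, each run is assumed to be saved in its own subfolder of runs, and "newest" is taken to mean the subfolder with the latest modification time.

```python
import zipfile
from pathlib import Path

SUBMISSION_FILES = ["helper.py", "main.py", "project_tests.py"]


def build_submission(project_dir, zip_path):
    """Zip the required source files plus the newest folder under runs.

    Assumption: each run is stored in its own subfolder of runs, and the
    newest one is the subfolder with the latest modification time.
    Returns the run folder that was included.
    """
    project_dir = Path(project_dir)
    run_dirs = [p for p in (project_dir / "runs").iterdir() if p.is_dir()]
    if not run_dirs:
        raise FileNotFoundError("No run folders found under runs")
    newest_run = max(run_dirs, key=lambda p: p.stat().st_mtime)

    with zipfile.ZipFile(zip_path, "w", zipfile.ZIP_DEFLATED) as archive:
        for name in SUBMISSION_FILES:
            archive.write(project_dir / name, arcname=name)
        for path in sorted(newest_run.rglob("*")):
            if path.is_file():
                # Keep paths relative to the project root inside the archive.
                archive.write(path, arcname=path.relative_to(project_dir).as_posix())
    return newest_run
```

For example, `build_submission(".", "submission.zip")` run from the project root would write the archive next to main.py.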

A well-written README file can enhance your project and portfolio. Develop your ability to create professional README files by completing this free course.