PyHook is MIT-licensed software, written in Python and C++, for accessing and modifying the ReShade back buffer.

PyHook consists of two elements:

- Python code that finds a process with ReShade loaded, injects the addon into it, and allows frames to be processed in code via dynamic pipelines.

- C++ addon DLL, written using the ReShade API, that exposes the back buffer via shared memory and allows dynamic pipelines to be configured in the ReShade Addon UI.
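As a rough illustration of the shared-memory hand-off between the two elements, the sketch below uses Python's standard `multiprocessing.shared_memory` module. It is not PyHook's implementation (the addon side is C++ and uses Boost.Interprocess); the frame size, the RGB byte layout and the `invert_frame` helper are assumptions made for the example.

```python
from multiprocessing import shared_memory

WIDTH, HEIGHT, CHANNELS = 4, 2, 3          # tiny hypothetical RGB frame
FRAME_BYTES = WIDTH * HEIGHT * CHANNELS


def invert_frame(buf: memoryview) -> None:
    """Process the frame in place: invert every 8-bit channel value."""
    for i in range(FRAME_BYTES):
        buf[i] = 255 - buf[i]


# "Producer" side (the addon's role): create a segment and write a frame into it.
producer = shared_memory.SharedMemory(create=True, size=FRAME_BYTES)
producer.buf[:FRAME_BYTES] = bytes([10] * FRAME_BYTES)

# "Consumer" side (the Python code's role): attach by name and edit the same bytes.
consumer = shared_memory.SharedMemory(name=producer.name)
invert_frame(consumer.buf)

# The producer sees the modified frame without any copy being sent back.
result = bytes(producer.buf[:FRAME_BYTES])

consumer.close()
producer.close()
producer.unlink()
```

Both handles map the same memory, so the frame is modified in place and nothing has to be serialized between the two sides.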


- Graphical user interface for managing multiple PyHook sessions

- Automatic ReShade detection and DLL validation

- Automatic addon DLL injection

- Shared memory as storage

- Dynamic pipelines ordering and settings in ReShade UI via addon

- Pipeline lifecycle support (load/process/unload)

- Pipeline settings callbacks (before and after change)

- Pipeline multi-pass - process frame multiple times in single pipeline

- Frame processing in Python via `numpy` array

- JSON file with pipelines settings

- Local Python environment usage in pipeline code

- Automatic update

- Automatic pipeline files download

- Automatic requirements installation

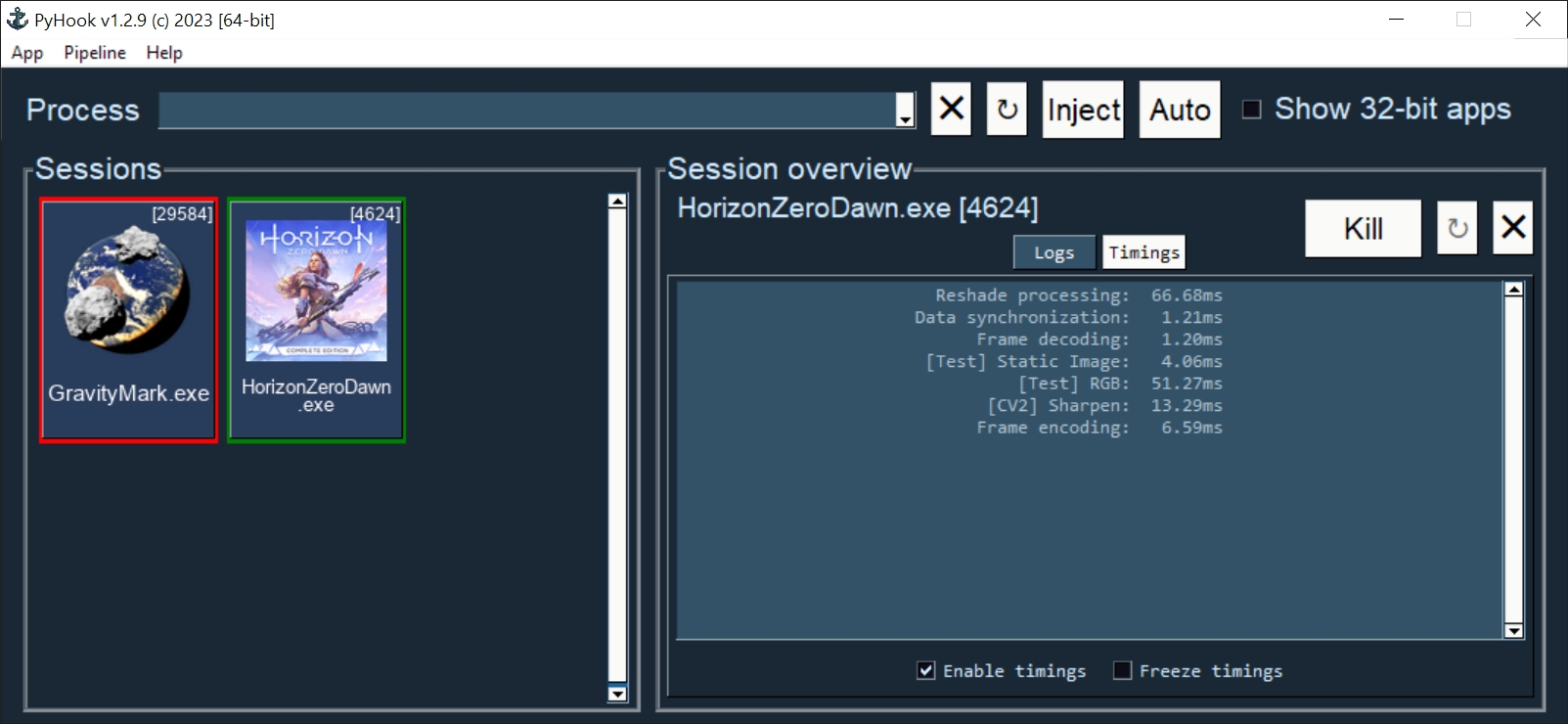

- Session timings for each pipeline processing
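To make the lifecycle, settings-callback and multi-pass features more concrete, here is a minimal sketch of how such a pipeline could be modelled. The class, its method names and the rule that a `before_change` callback can reject a value are all hypothetical and are not taken from PyHook's pipeline template; a plain list of integers stands in for the `numpy` array a real pipeline would receive.

```python
from typing import Callable, Dict, List

Frame = List[int]  # stand-in for the numpy array a real pipeline would receive


class Pipeline:
    """Hypothetical pipeline with lifecycle hooks, settings callbacks and multi-pass."""

    def __init__(self, process: Callable[[Frame, int], Frame], passes: int = 1) -> None:
        self.process = process      # called once per pass with (frame, pass_index)
        self.passes = passes
        self.settings: Dict[str, float] = {"amount": 1.0}
        self.loaded = False

    def on_load(self) -> None:
        self.loaded = True          # e.g. load a model

    def on_unload(self) -> None:
        self.loaded = False         # e.g. release the model

    def before_change(self, key: str, value: float) -> bool:
        return value >= 0.0         # assumption: returning False rejects the change

    def after_change(self, key: str, value: float) -> None:
        pass                        # e.g. rebuild cached state

    def change_setting(self, key: str, value: float) -> bool:
        if not self.before_change(key, value):
            return False
        self.settings[key] = value
        self.after_change(key, value)
        return True

    def run(self, frame: Frame) -> Frame:
        # Multi-pass: feed the output of each pass into the next one.
        for pass_index in range(self.passes):
            frame = self.process(frame, pass_index)
        return frame


brighten = Pipeline(lambda frame, _pass: [min(255, v + 10) for v in frame], passes=3)
brighten.on_load()
output = brighten.run([0, 100, 250])      # three passes of +10, clamped at 255
rejected = brighten.change_setting("amount", -1.0)
brighten.on_unload()
```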

| PyHook version | OpenGL | DirectX 9 | DirectX 10 | DirectX 11 | DirectX 12 | Vulkan |
|---|---|---|---|---|---|---|
| 32-bit | ✔ | ✔ | ✔ | ✔ | ✔* | ✔* |
| 64-bit | ✔ | ✔ | ✔ | ✔ | ✔* | ✔* |

\* For ReShade <= 5.4.2 and PyHook <= 0.8.1, the whole ImGui is affected by pipelines for these APIs due to a bug. For ReShade <= 5.4.2 and PyHook > 0.8.1, ImGui is not affected, but the OSD window has a cut-out background.

Note that PyHook does not support multisampling with any API.

Benchmark setup:

- UNIGINE Superposition 64-bit DX11

- 1280x720, windowed, lowest preset

- Intel Core i9 9900KS

- RTX 2080 Super 8GB

- 32GB DDR4 RAM

- Pipelines were run with `CUDA`, with a few additional runs labelled as `CPU`

Benchmark command:

    $ .\superposition.exe -preset 0 -video_app direct3d11 -shaders_quality 0 -textures_quality 0 ^
    -dof 0 -motion_blur 0 -video_vsync 0 -video_mode -1 ^
    -console_command "world_load superposition/superposition && render_manager_create_textures 1" ^
    -project_name Superposition -video_fullscreen 0 -video_width 1280 -video_height 720 ^
    -extern_plugin GPUMonitor -mode 0 -sound 0 -tooltips 1

Results:

| PyHook settings | FPS min | FPS avg | FPS max | Score |
|---|---|---|---|---|
| PyHook disabled | 128 | 227 | 331 | 30357 |
| PyHook enabled | 76 | 101 | 120 | 13449 |
| | 30 CUDA / 15 CPU | 33 CUDA / 15 CPU | 35 CUDA / 16 CPU | 4472 CUDA / 2052 CPU |
| | 9 | 10 | 10 | 1305 |
| | 6 | 6 | 6 | 783 |
| YOLO Model: Medium | 28 CUDA / 4 CPU | 32 CUDA / 4 CPU | 36 CUDA / 4 CPU | 4275 CUDA / 537 CPU |
| | 8 | 8 | 8 | 1100 |
| | 9 | 9 | 9 | 1207 |
| Amount: 1.0 | 51 CPU | 61 CPU | 67 CPU | 8128 CPU |
| Scale: 1.0 | 4 | 4 | 4 | 579 |
| Scale: 1.0 | 14 | 15 | 15 | 1956 |
| | 3 | 3 | 4 | 464 |
| | 8 | 8 | 8 | 1074 |
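FPS figures can be easier to compare as per-frame time. The short calculation below converts the average FPS of the "PyHook disabled" and "PyHook enabled" rows into milliseconds per frame; it only restates the table values and says nothing about where the extra time is spent.

```python
def frame_time_ms(fps: float) -> float:
    """Average time spent on one frame, in milliseconds."""
    return 1000.0 / fps


baseline_avg_fps = 227   # "PyHook disabled" row, FPS avg
enabled_avg_fps = 101    # "PyHook enabled" row, FPS avg

# Extra time per frame once PyHook is enabled (no pipeline active).
overhead_ms = frame_time_ms(enabled_avg_fps) - frame_time_ms(baseline_avg_fps)

# Share of the baseline frame rate that is retained.
retained = enabled_avg_fps / baseline_avg_fps

print(f"overhead: {overhead_ms:.2f} ms per frame, retained: {retained:.0%} of baseline FPS")
```

With these numbers, enabling PyHook adds roughly 5.5 ms per frame and keeps about 44% of the baseline average frame rate in this benchmark.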

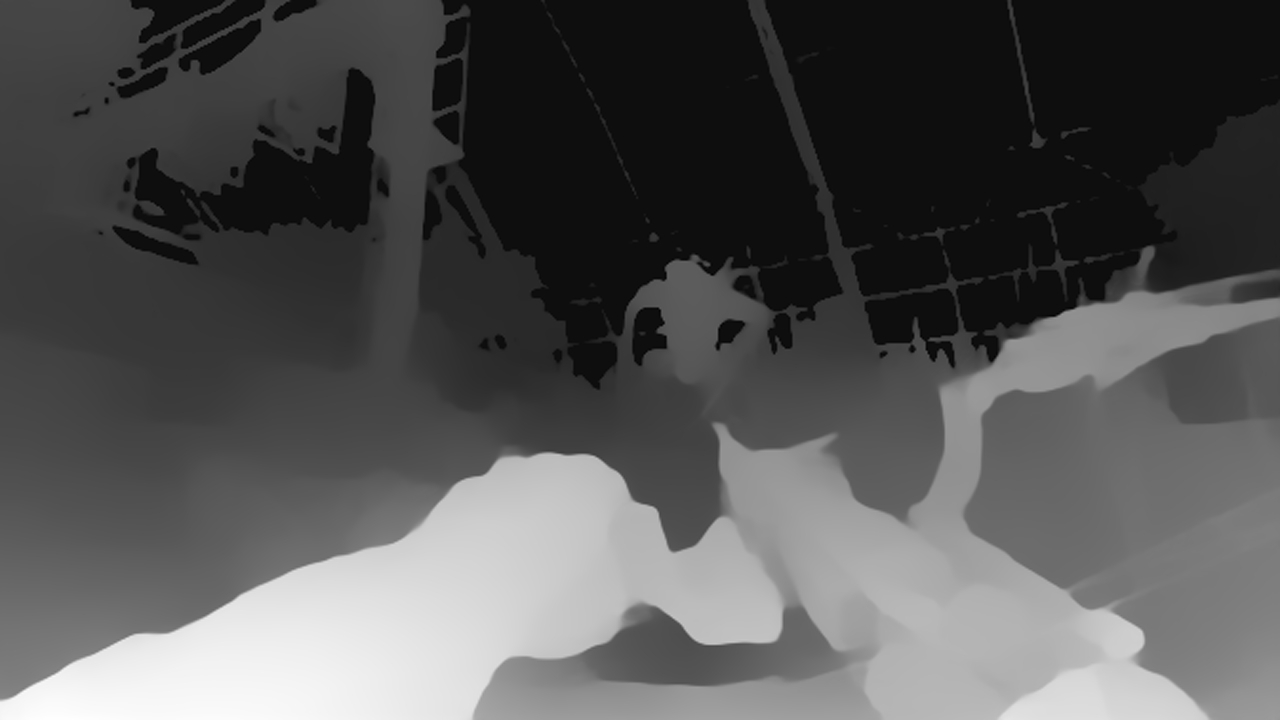

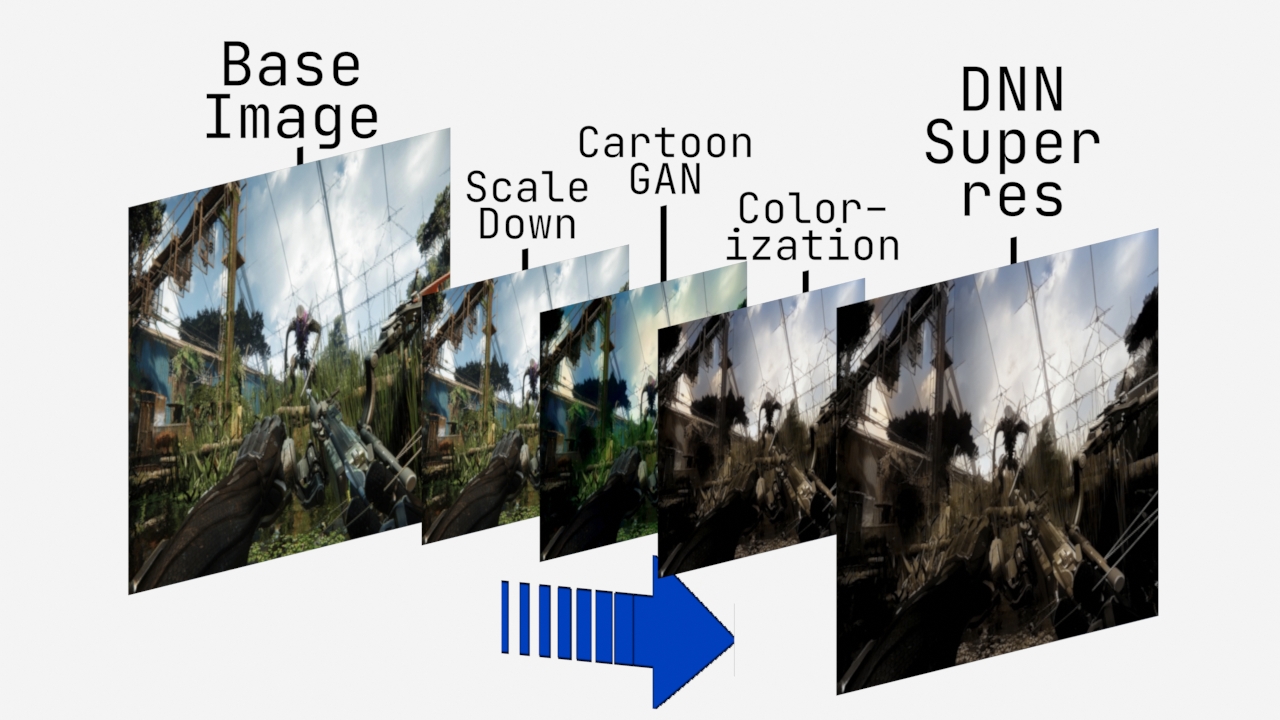

DNN super-resolution is crucial for fast AI pipeline processing. It allows multiple AI effects to be processed much faster because the input frame is smaller.

As shown in the flowchart, super-resolution consists of the following steps:

- Scale base image down by some factor.

- Process small frame through AI pipelines to achieve much better performance.

- Scale processed frame back using DNN super-resolution.
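The benefit of the first step can be estimated from pixel counts alone. The sketch below assumes a 1280x720 base frame (the resolution used in the benchmarks) and integer division when scaling down; the function names are illustrative, and the real speed-up depends on the model and on the cost of the final up-scaling step.

```python
from typing import Tuple


def downscaled_size(width: int, height: int, factor: int) -> Tuple[int, int]:
    """Size of the small frame that the AI pipelines would process."""
    return width // factor, height // factor


def pixel_fraction(width: int, height: int, factor: int) -> float:
    """Fraction of the original pixel count left after down-scaling."""
    small_w, small_h = downscaled_size(width, height, factor)
    return (small_w * small_h) / (width * height)


for factor in (2, 3, 4):
    size = downscaled_size(1280, 720, factor)
    print(factor, size, f"{pixel_fraction(1280, 720, factor):.1%}")
```

A factor of 2 already leaves only a quarter of the pixels for the AI pipelines to process, and a factor of 4 leaves one sixteenth.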

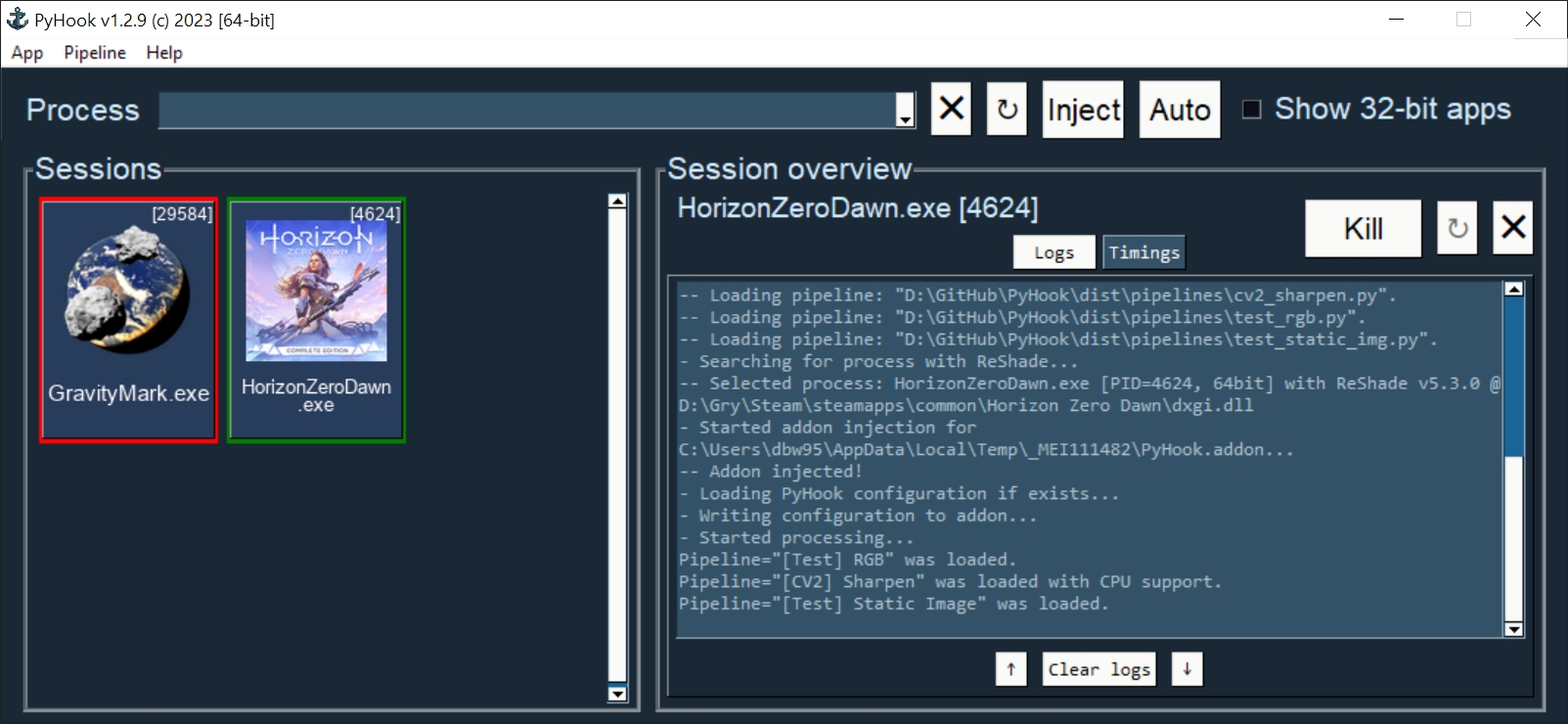

PyHook uses PySimpleGUI, which is built on Tkinter, to create its graphical interface on Windows.

The GUI consists of a few elements:

- Menu bar, where the user can change app settings, verify pipeline files, install pipeline requirements, update the app and show information about it.

- In the 64-bit version of PyHook there is also a checkbox that allows selecting 32-bit processes.

- Injection bar, where the user can find a given process automatically or through the search bar using the process name or ID.

- Session list, where the user can see all active and dead sessions. Each session has an icon (if it can be read from the .exe file via the Windows API), an executable name and a process ID. Active sessions have a green border; dead ones have a red border.

- Session overview, where the user can see live logs and timings (shown in the screenshot below) for a given session, kill it, or restart it (if it was stopped for any reason).

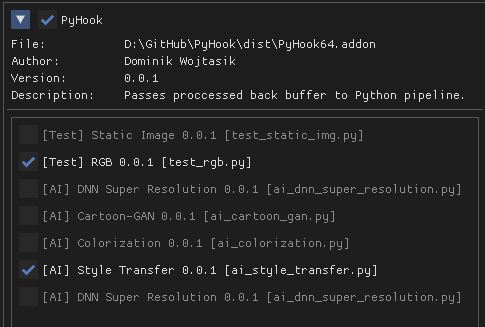

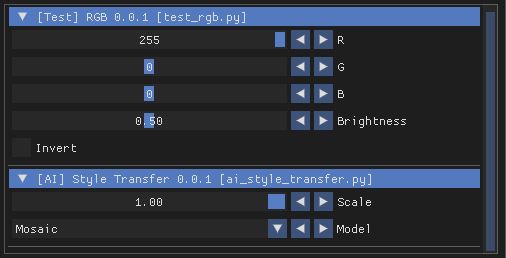

The PyHook addon uses the ReShade ImGui UI to display the list of available pipelines and their respective settings.

To display the pipeline list, open the ReShade UI and go to the Add-ons tab:

Settings for enabled pipelines are displayed below that list:

Supported UI widgets (read more in the pipeline template):

- Checkbox

- Slider (integer value)

- Slider (float value)

- Combo box (single value select)
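The four widget kinds map naturally onto four value types. As a sketch only, the snippet below shows one way values loaded from a JSON settings file could be coerced to match their widget; the `WIDGETS` schema, its keys and the `coerce` helper are hypothetical and do not reflect the format described in the pipeline template.

```python
import json

# Hypothetical widget descriptions; names and layout are illustrative only.
WIDGETS = {
    "enabled": {"type": "checkbox"},
    "passes": {"type": "slider_int", "min": 1, "max": 8},
    "amount": {"type": "slider_float", "min": 0.0, "max": 2.0},
    "model": {"type": "combo", "options": ["small", "medium", "large"]},
}


def coerce(name: str, value):
    """Convert a raw JSON value to the type and range its widget allows."""
    spec = WIDGETS[name]
    kind = spec["type"]
    if kind == "checkbox":
        return bool(value)
    if kind == "slider_int":
        return max(spec["min"], min(spec["max"], int(value)))
    if kind == "slider_float":
        return max(spec["min"], min(spec["max"], float(value)))
    if kind == "combo":
        if value not in spec["options"]:
            raise ValueError(f"{value!r} is not a valid option for {name!r}")
        return value
    raise ValueError(f"unknown widget type {kind!r}")


raw = json.loads('{"enabled": 1, "passes": 20, "amount": 0.5, "model": "medium"}')
settings = {name: coerce(name, value) for name, value in raw.items()}
```

Here the out-of-range `passes` value is clamped to the slider maximum, while an unknown combo box option would raise an error instead of being silently replaced.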

- ReShade >= 5.0.0

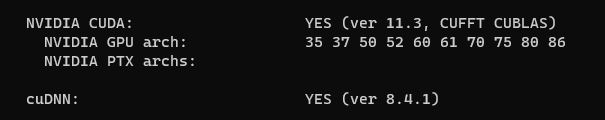

- Python == `3.10.6` for 64-bit* | `3.10.4` for 32-bit (for pipelines only)

- CUDA >= 11.3** (optional, for AI pipelines only)

- cuDNN >= 8.4.1** (optional, for AI pipelines only)

- Only for specific pipelines: any libraries required by the pipeline code.

Note that AI pipelines that require PyTorch or TensorFlow will not work on a 32-bit system because these libraries do not support it.

- Same as runtime, but for the ReShade addon only the included headers are needed

- Boost == 1.80.0 (used for Boost.Interprocess shared memory)

- Dear ImGui == 1.86

- NumPy == 1.23.2

- Pillow == 9.2.0

- psutil == 5.9.2

- Pyinjector == 1.1.0

- PySimpleGUI == 4.60.3

- Requests == 2.28.1

- Same as build

- PyInstaller == 5.3

- Python Standard Library List == 0.8.0

You can download selected PyHook archives from Releases.

- Download and unpack the zip archive with the PyHook executable and pipelines. Releases up to `1.1.2` also contain the `*.addon` file.

- Prepare a local Python environment (read more in Virtual environment) and download pipeline files if needed. Each pipeline has its own directory with a `download.txt` file that lists the files to download. Files can also be downloaded from the PyHook app menu.

- Start the game with ReShade installed.

- Start `PyHook.exe`.

For custom pipelines (e.g. AI ones), install their requirements (manually or from the PyHook app menu) and set up the ENV variables or settings that point to a Python 3 binary in the required version.

Available ENV variables (required up to `1.1.2`; newer versions can use settings instead):

- `LOCAL_PYTHON_32` (path to 32-bit Python)

- `LOCAL_PYTHON_64` (path to 64-bit Python)

- `LOCAL_PYTHON` (fallback path if none of the above is set)
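The lookup order implied by these variables can be sketched in a few lines. The function name and the example paths are hypothetical, and treating an empty value as unset is an assumption; only the three variable names and the fallback rule come from the list above.

```python
import os
from typing import Mapping, Optional


def resolve_local_python(is_64bit: bool, env: Mapping[str, str] = os.environ) -> Optional[str]:
    """Return the architecture-specific path if set, else the generic fallback."""
    specific = "LOCAL_PYTHON_64" if is_64bit else "LOCAL_PYTHON_32"
    # An empty string is treated the same as an unset variable.
    return env.get(specific) or env.get("LOCAL_PYTHON")


# Example with an explicit mapping instead of the real process environment.
example_env = {
    "LOCAL_PYTHON_64": r"C:\envs\pyhook64env\python.exe",   # hypothetical path
    "LOCAL_PYTHON": r"C:\Python310\python.exe",             # hypothetical path
}
print(resolve_local_python(True, example_env))    # architecture-specific variable wins
print(resolve_local_python(False, example_env))   # falls back to LOCAL_PYTHON
```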

Models for pipelines can be downloaded using the links in the `download.txt` file supplied in each pipeline's directory.

You can also download them automatically from the PyHook Pipeline menu.

If your antivirus flags PyHook as dangerous software, add an exception for it; the detection is caused by PyHook's DLL injection capabilities.

To build the PyHook addon, download Boost and place its header files in `Addon/include`. Then open the `*.sln` project in Visual Studio and build the given release.

To build PyHook itself, run `build.bat` in Anaconda Prompt.

If any Python package is missing, try updating your conda environment and adding the conda-forge channel:

    $ conda config --add channels conda-forge

PyHook allows you to freely use a virtual environment from Anaconda.

To create a 64-bit virtual env, you can use the following commands in Anaconda Prompt:

    $ conda create -n pyhook64env python=3.10.6 -y
    $ conda activate pyhook64env
    $ conda install pip -y
    $ pip install -r any_pipeline.requirements.txt
    $ conda deactivate

For 32-bit, a different Python version has to be used (no newer version was available at the time of writing). The two `set CONDA_FORCE_32BIT` commands are needed only on a 64-bit system:

    $ set CONDA_FORCE_32BIT=1
    $ conda create -n pyhook32env python=3.10.4 -y
    $ conda activate pyhook32env
    $ conda install pip -y
    $ pip install -r any_pipeline.requirements.txt
    $ conda deactivate
    $ set CONDA_FORCE_32BIT=

When the virtual environment is ready, copy its Python executable path and set the system environment variables described in Installation.
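Before wiring an environment into the 32-bit or 64-bit variable, it can help to confirm which architecture its interpreter actually has. A small sketch using only the standard library follows; run it with the environment's own `python.exe`. The helper name is illustrative.

```python
import struct
import sys


def interpreter_bits() -> int:
    """Pointer size of the running interpreter in bits (32 or 64)."""
    return struct.calcsize("P") * 8


# Path to copy into the environment variable, and the architecture it belongs to.
print(sys.executable)
print(f"{interpreter_bits()}-bit Python {sys.version_info.major}.{sys.version_info.minor}")
```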

- Anaconda for virtual environment

- CUDA >= 11.3 (pick last supported by your GPU and pipeline modules)

- cuDNN >= 8.4.1 (pick last supported by your CUDA version)

- Visual Studio >= 16 with C++ support

- git for version control

- CMake for source build

After installation, make sure that the following environment variables are set:

- `CUDA_PATH` (e.g. "C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v11.3")

- `PATH` with paths to CUDA + cuDNN and CMake, e.g.:

  - "C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v11.3\bin"

  - "C:\Program Files\CMake\bin"

Once the requirements are in place, run Anaconda Prompt and follow the code from the file `build_opencv_cuda.bat`.

After the build, new environment variables have to be set:

- `OpenCV_DIR` (e.g. "C:\OpenCV\OpenCV-4.6.0")

- `PATH`, add the path to the OpenCV built binaries (e.g. "C:\OpenCV\OpenCV-4.6.0\x64\vc16\bin")

- `OPENCV_LOG_LEVEL` set to "ERROR", to suppress warning messages

The virtual environment created by `build_opencv_cuda.bat` is named `opencv_build`.

    >>> import cv2
    >>> print(cv2.cuda.getCudaEnabledDeviceCount())
    >>> print(cv2.getBuildInformation())

Finally, connect OpenCV to PyHook. To do this, set the `LOCAL_PYTHON_64` env or setting to the executable file from the OpenCV virtual environment.

    >>> import sys
    >>> print(sys.executable)
    C:\Users\xyz\anaconda3\envs\opencv_build\python.exe

The DNN Super Resolution pipeline supports both CPU and GPU OpenCV versions and will be used as the benchmark.
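A pipeline that supports both CPU and GPU OpenCV builds has to decide at runtime which one is available. A minimal sketch of such a check, reusing the `cv2.cuda.getCudaEnabledDeviceCount()` call from the console session; the function name is hypothetical and this is not necessarily how the PyHook pipeline makes the decision.

```python
def opencv_cuda_available() -> bool:
    """True only if OpenCV is importable and reports at least one CUDA device."""
    try:
        import cv2
    except ImportError:
        return False          # OpenCV is not installed in this environment
    try:
        return cv2.cuda.getCudaEnabledDeviceCount() > 0
    except (AttributeError, cv2.error):
        return False          # build without usable CUDA support


device = "CUDA" if opencv_cuda_available() else "CPU"
print(f"DNN super resolution would run on: {device}")
```

Falling back to `CPU` on any failure keeps the pipeline usable with a stock OpenCV package, at the cost of the lower frame rates shown in the benchmark.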

Benchmark setup:

- Game @ 1280x720 resolution, 60 FPS

- DNN Super Resolution pipeline with FSRCNN model

- Intel Core i9 9900KS

- RTX 2080 Super 8GB

- 32GB DDR4 RAM

Results:

| DNN version | FPS | GPU Usage % | GPU Mem MB | CPU Usage % | RAM MB |
|---|---|---|---|---|---|
| CPU 2x | 8 | 2 | 0 | 75 | 368 |
| CPU 3x | 16 | 4 | 0 | 67 | 257 |
| CPU 4x | 24 | 5 | 0 | 60 | 216 |
| GPU CUDA 2x | 35 | 27 | 697 | 12 | 1440 |
| GPU CUDA 3x | 37 | 21 | 617 | 12 | 1354 |
| GPU CUDA 4x | 41 | 17 | 601 | 12 | 1289 |

NOTE: Values in GPU Mem MB and RAM MB include only the memory used by the pipeline (game not included).

Conclusion:

GPU support gives over 4x better performance for the best-quality (2x) DNN super resolution and almost 2x (about 1.7x) for the lowest-quality (4x) one.
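The ratios behind this conclusion follow directly from the FPS column of the results table:

```python
# FPS values copied from the results table, keyed by super-resolution scale.
cpu_fps = {2: 8, 3: 16, 4: 24}
cuda_fps = {2: 35, 3: 37, 4: 41}

# Speed-up of the CUDA build over the CPU build for each scale.
speedup = {scale: cuda_fps[scale] / cpu_fps[scale] for scale in cpu_fps}

for scale, ratio in speedup.items():
    print(f"{scale}x: {ratio:.2f}x faster with CUDA")
```

The gain shrinks as the scale grows because the CPU version speeds up considerably with smaller inputs (8 to 24 FPS), while the CUDA version changes little (35 to 41 FPS).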

Version `1.1.2` renamed the pipeline utils module to `pipeline_utils`. Pipelines written for older versions can be updated to `1.1.2` by changing the import from `utils` to `pipeline_utils`.

Pipelines written for `0.9.0` that use the `use_fake_modules` utils method will not work in `0.8.1`. They can be fixed for `0.8.1` by adding the `use_fake_modules` definition directly in the pipeline file.

- Updated copyright note.

- Added license info in about window.

- Now 64-bit PyHook supports AI pipelines for 32-bit applications.

- Added information about supported platform (either 32-bit, 64-bit or both) to pipelines.

- Added possibility to inject into 32-bit application in 64-bit PyHook.

- Fixed OS architecture test.

- Improved popup windows responsiveness.

- Added timings for pipeline processing.

- Added process list filtration by PyHook architecture.

- Added child window centering in parent.

- Added automatic requirements installation.

- Added settings for local python paths in GUI.

- Fixed timeout for subprocess communication.

- Added automatic and manual app update.

- Fixed re-download issues with autodownload and cancelling.

- Process combo box now displays dropdown options during writing.

- Added pipeline files downloading cancel confirmation popup.

- Added built-in addon DLL inside executable.

- Fixed invalid icon readout.

- Updated app runtime icon.

- Fixed window flickering on move.

- Added confirmation popup on application exit if any PyHook session is active.

- Increased speed of automatic injection.

- Fixed frozen bundle issues.

- Added downloading window.

- Added settings window.

- Added about window.

- Added images to GUI.

- Added GUI with multiple PyHook sessions support.

- Improved error handling for pipeline processing.

- Replaced old depth estimation pipeline with new implementation using https://github.com/isl-org/MiDaS

- Fixed initial pipeline values loaded from file.

- Updated pipelines with information about selected device (CPU or CUDA).

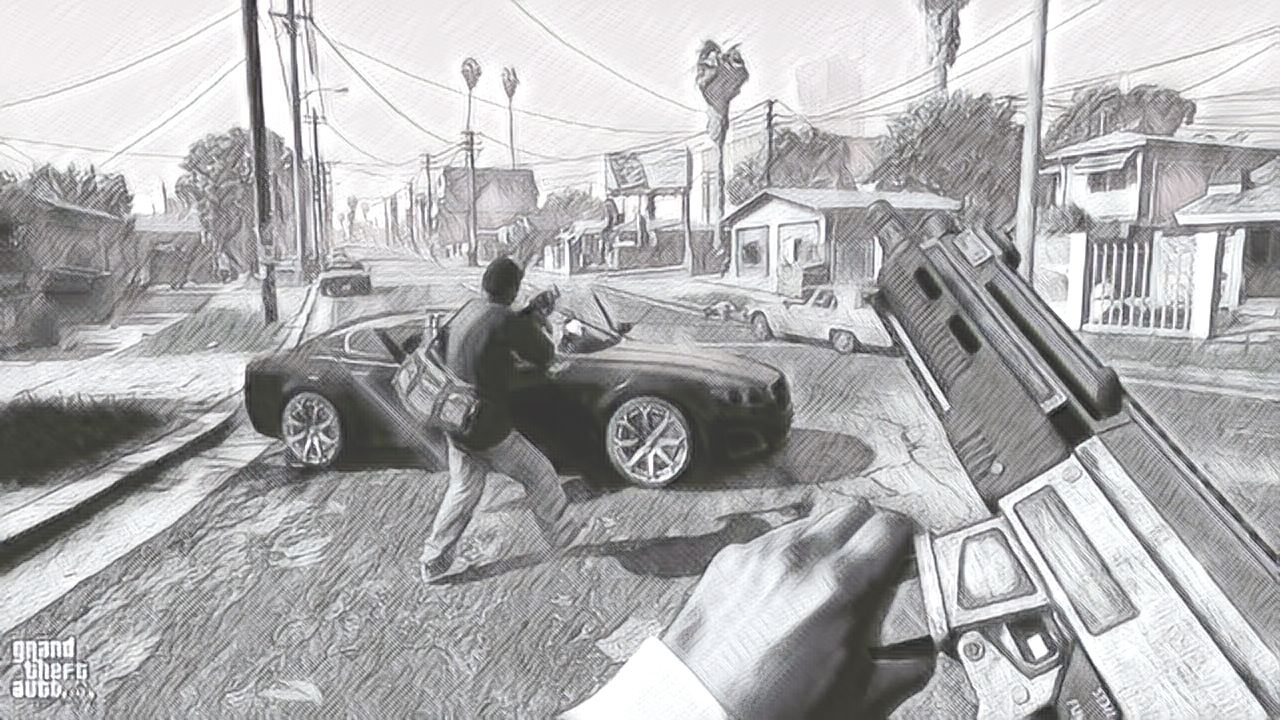

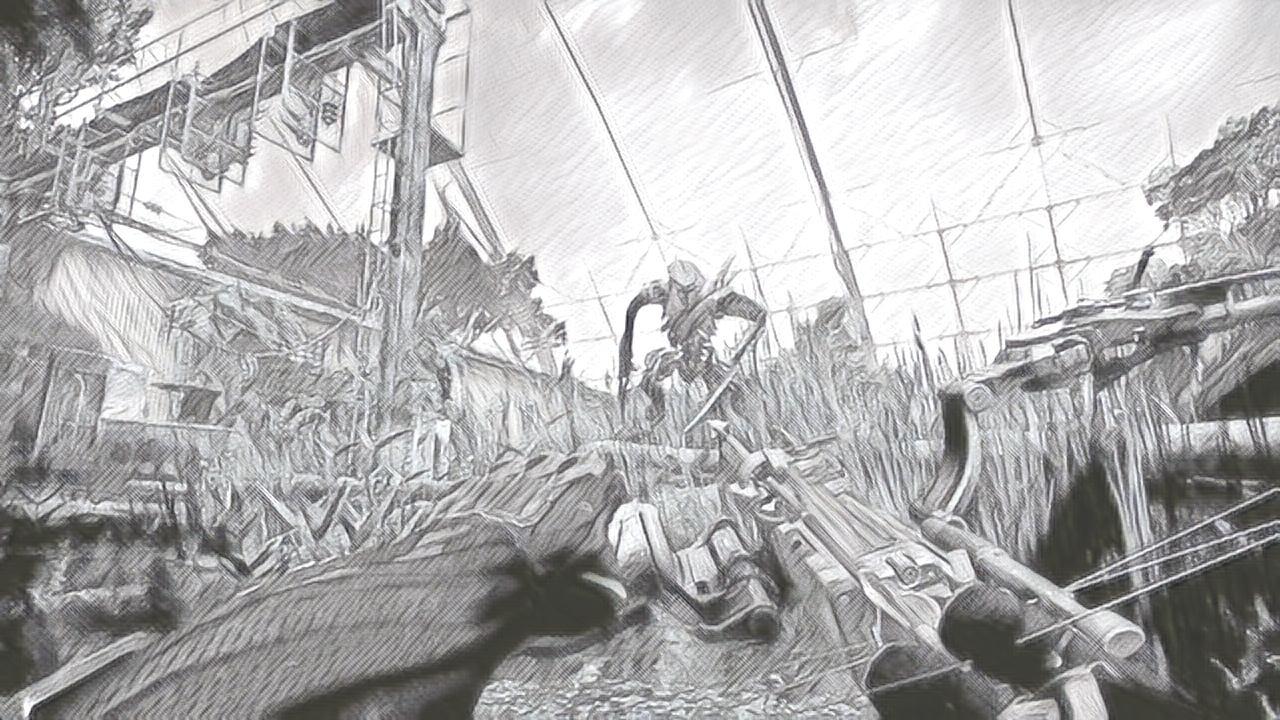

- Added OpenCV sharpen pipeline with CPU support.

- Added PyHook settings file.

- Fixed ImGui being affected for ReShade version up to 5.4.2.

- Added AI depth estimation pipeline example using https://github.com/wolverinn/Depth-Estimation-PyTorch

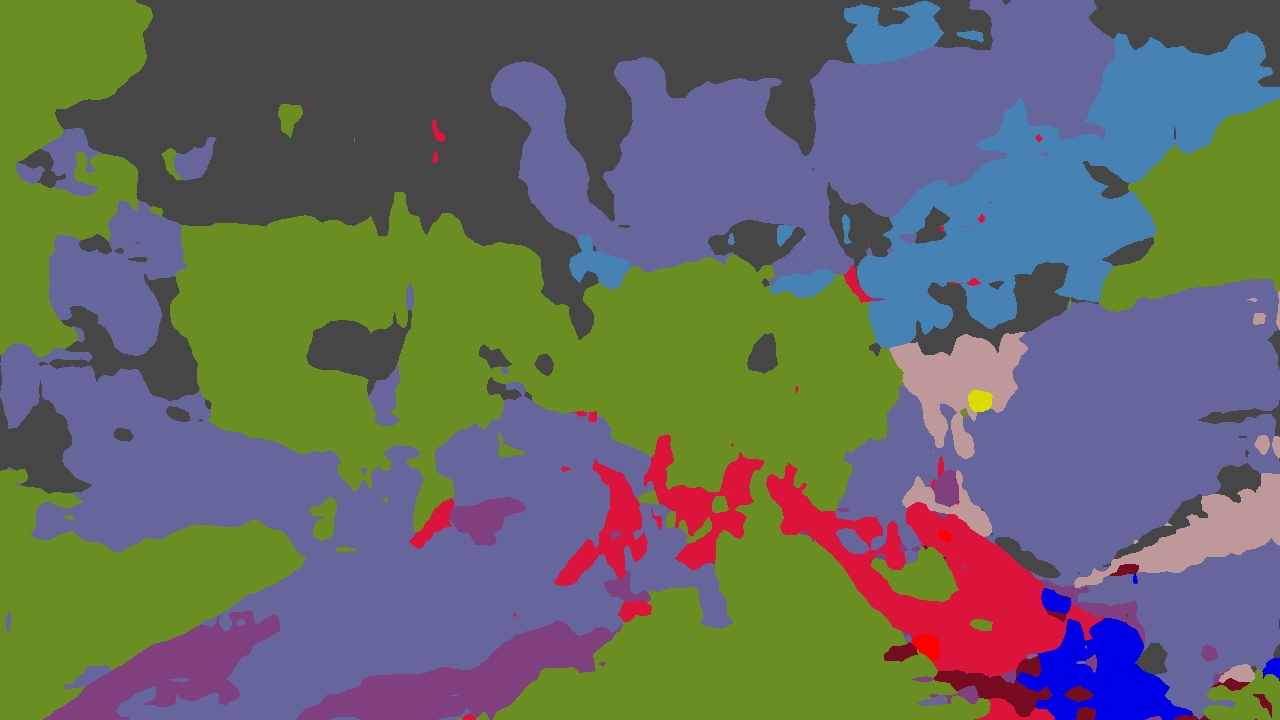

- Added AI semantic segmentation pipeline example using https://github.com/XuJiacong/PIDNet

- Fixed float inaccuracy in pipeline settings.

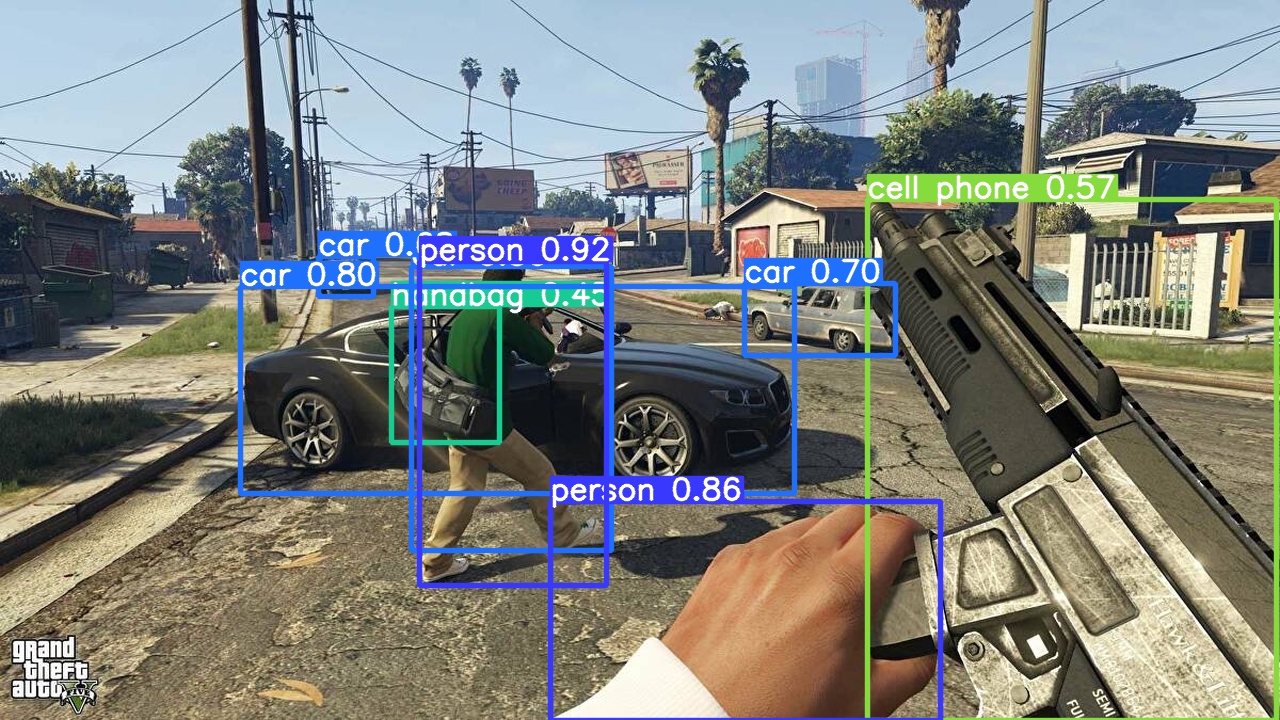

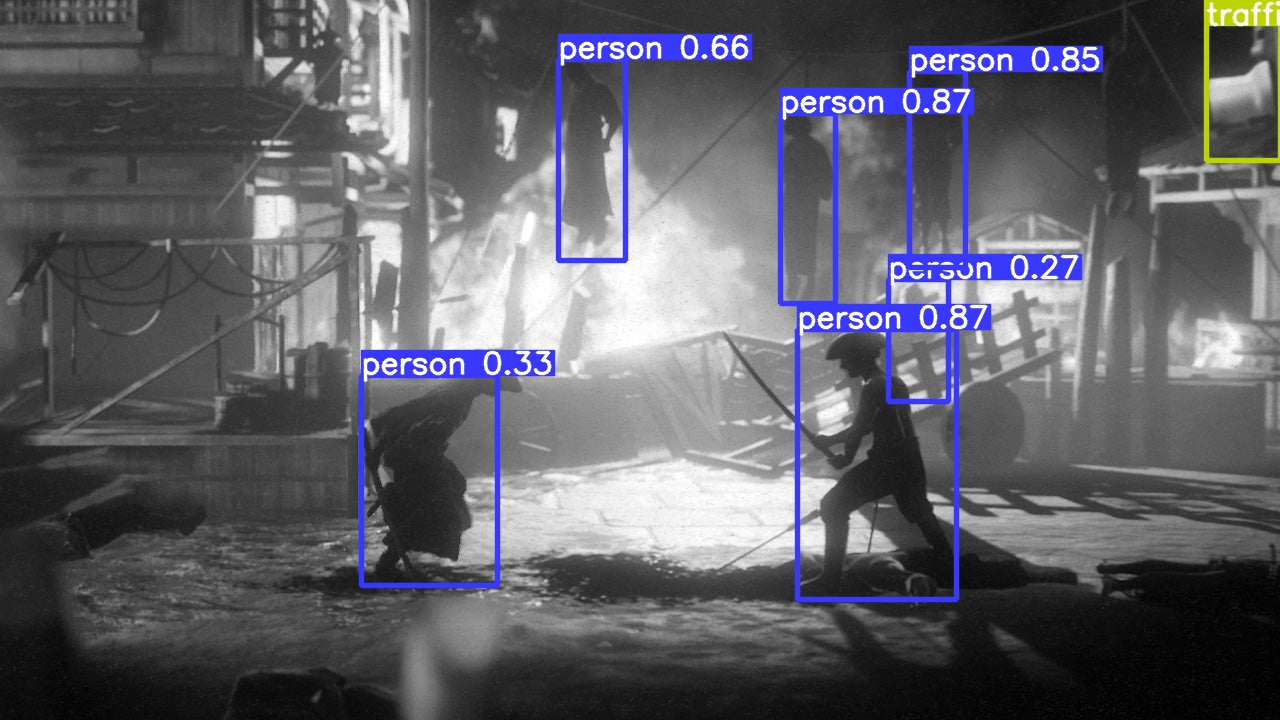

- Added AI object detection pipeline example using https://github.com/ultralytics/yolov5

- Added AI style transfer pipeline example using https://github.com/zhanghang1989/PyTorch-Multi-Style-Transfer

- Added automatic pipeline files download on startup.

- Added support for DirectX 12 and Vulkan with fallback for older ReShade version.

- Added support for Vulkan DLL names.

- Added AI super resolution example using OpenCV DNN super resolution.

- Added multistage (multiple passes per frame) pipelines support.

- Improved error handling in ReShade addon.

- Added error notification on settings save.

- Improved synchronization between PyHook and addon.

- Added OpenGL support.

- Added multiple texture formats support.

- Added logs removal from DLL loading.

- Added JSON settings for pipelines.

- Added combo box selection in settings UI.

- Added AI colorization pipeline example using https://github.com/richzhang/colorization

- Added AI Cartoon-GAN pipeline example using https://github.com/FilipAndersson245/cartoon-gan

- Added dynamic modules load from local Python environment.

- Added fallback to manual PID supply.

- Updated pipeline template.

- Added new callbacks for settings changes (before and after change).

- Added ReShade UI for pipeline settings in ImGui.

- Added pipeline utils to faster pipeline creation.

- Added dynamic pipeline variables parsing.

- Added shared memory segment for pipeline settings.

- Added AI style transfer pipeline example using https://github.com/mmalotin/pytorch-fast-neural-style-mobilenetV2

- Added pipeline lifecycle support (load/process/unload).

- Added pipeline ordering and selection GUI in ReShade addon UI.

- Added shared memory for configuration.

- Added multisampling error in PyHook.

- Added pipeline processing for dynamic effects loading.

- Added shared data refresh on in-game settings changes.

- Disabled multisampling on swapchain creation.

- Fixed error display on app exit.

- Initial version.

The pipeline files code benefits from prior work and implementations including:

- Fast neural style with MobileNetV2 bottleneck blocks

- Cartoon-GAN

- Colorful Image Colorization

- PyTorch-Style-Transfer

- PIDNet: A Real-time Semantic Segmentation Network Inspired from PID Controller

- Depth-Estimation-PyTorch

- Towards Robust Monocular Depth Estimation: Mixing Datasets for Zero-shot Cross-dataset Transfer

@misc{https://doi.org/10.48550/arxiv.1603.08155,

doi = {10.48550/ARXIV.1603.08155},

url = {https://arxiv.org/abs/1603.08155},

author = {Johnson, Justin and Alahi, Alexandre and Fei-Fei, Li},

title = {Perceptual Losses for Real-Time Style Transfer and Super-Resolution},

publisher = {arXiv},

year = {2016},

copyright = {arXiv.org perpetual, non-exclusive license}

}

@article{https://doi.org/10.48550/arxiv.1801.04381,

doi = {10.48550/ARXIV.1801.04381},

url = {https://arxiv.org/abs/1801.04381},

author = {Sandler, Mark and Howard, Andrew and Zhu, Menglong and Zhmoginov, Andrey and Chen, Liang-Chieh},

title = {MobileNetV2: Inverted Residuals and Linear Bottlenecks},

publisher = {arXiv},

year = {2018},

copyright = {arXiv.org perpetual, non-exclusive license}

}

@misc{https://doi.org/10.48550/arxiv.1508.06576,

doi = {10.48550/ARXIV.1508.06576},

url = {https://arxiv.org/abs/1508.06576},

author = {Gatys, Leon A. and Ecker, Alexander S. and Bethge, Matthias},

title = {A Neural Algorithm of Artistic Style},

publisher = {arXiv},

year = {2015},

copyright = {arXiv.org perpetual, non-exclusive license}

}

@misc{https://doi.org/10.48550/arxiv.1607.08022,

doi = {10.48550/ARXIV.1607.08022},

url = {https://arxiv.org/abs/1607.08022},

author = {Ulyanov, Dmitry and Vedaldi, Andrea and Lempitsky, Victor},

title = {Instance Normalization: The Missing Ingredient for Fast Stylization},

publisher = {arXiv},

year = {2016},

copyright = {arXiv.org perpetual, non-exclusive license}

}

@misc{andersson2020generative,

title={Generative Adversarial Networks for photo to Hayao Miyazaki style cartoons},

author={Filip Andersson and Simon Arvidsson},

year={2020},

eprint={2005.07702},

archivePrefix={arXiv},

primaryClass={cs.GR}

}

@inproceedings{zhang2016colorful,

title={Colorful Image Colorization},

author={Zhang, Richard and Isola, Phillip and Efros, Alexei A},

booktitle={ECCV},

year={2016}

}

@article{zhang2017real,

title={Real-Time User-Guided Image Colorization with Learned Deep Priors},

author={Zhang, Richard and Zhu, Jun-Yan and Isola, Phillip and Geng, Xinyang and Lin, Angela S and Yu, Tianhe and Efros, Alexei A},

journal={ACM Transactions on Graphics (TOG)},

volume={9},

number={4},

year={2017},

publisher={ACM}

}

@article{zhang2017multistyle,

title={Multi-style Generative Network for Real-time Transfer},

author={Zhang, Hang and Dana, Kristin},

journal={arXiv preprint arXiv:1703.06953},

year={2017}

}

@misc{https://doi.org/10.48550/arxiv.1603.03417,

doi = {10.48550/ARXIV.1603.03417},

url = {https://arxiv.org/abs/1603.03417},

author = {Ulyanov, Dmitry and Lebedev, Vadim and Vedaldi, Andrea and Lempitsky, Victor},

title = {Texture Networks: Feed-forward Synthesis of Textures and Stylized Images},

publisher = {arXiv},

year = {2016},

copyright = {arXiv.org perpetual, non-exclusive license}

}

@InProceedings{Gatys_2016_CVPR,

author = {Gatys, Leon A. and Ecker, Alexander S. and Bethge, Matthias},

title = {Image Style Transfer Using Convolutional Neural Networks},

booktitle = {Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},

month = {June},

year = {2016}

}

@misc{xu2022pidnet,

title={PIDNet: A Real-time Semantic Segmentation Network Inspired from PID Controller},

author={Jiacong Xu and Zixiang Xiong and Shankar P. Bhattacharyya},

year={2022},

eprint={2206.02066},

archivePrefix={arXiv},

primaryClass={cs.CV}

}

@misc{https://doi.org/10.48550/arxiv.1612.03144,

doi = {10.48550/ARXIV.1612.03144},

url = {https://arxiv.org/abs/1612.03144},

author = {Lin, Tsung-Yi and Dollár, Piotr and Girshick, Ross and He, Kaiming and Hariharan, Bharath and Belongie, Serge},

title = {Feature Pyramid Networks for Object Detection},

publisher = {arXiv},

year = {2016},

copyright = {arXiv.org perpetual, non-exclusive license}

}

@misc{https://doi.org/10.48550/arxiv.1604.03901,

doi = {10.48550/ARXIV.1604.03901},

url = {https://arxiv.org/abs/1604.03901},

author = {Chen, Weifeng and Fu, Zhao and Yang, Dawei and Deng, Jia},

title = {Single-Image Depth Perception in the Wild},

publisher = {arXiv},

year = {2016},

copyright = {arXiv.org perpetual, non-exclusive license}

}

@inproceedings{NIPS2014_7bccfde7,

author = {Eigen, David and Puhrsch, Christian and Fergus, Rob},

booktitle = {Advances in Neural Information Processing Systems},

editor = {Z. Ghahramani and M. Welling and C. Cortes and N. Lawrence and K.Q. Weinberger},

pages = {},

publisher = {Curran Associates, Inc.},

title = {Depth Map Prediction from a Single Image using a Multi-Scale Deep Network},

url = {https://proceedings.neurips.cc/paper/2014/file/7bccfde7714a1ebadf06c5f4cea752c1-Paper.pdf},

volume = {27},

year = {2014}

}

@misc{https://doi.org/10.48550/arxiv.1708.08267,

doi = {10.48550/ARXIV.1708.08267},

url = {https://arxiv.org/abs/1708.08267},

author = {Fu, Huan and Gong, Mingming and Wang, Chaohui and Tao, Dacheng},

title = {A Compromise Principle in Deep Monocular Depth Estimation},

publisher = {arXiv},

year = {2017},

copyright = {arXiv.org perpetual, non-exclusive license}

}

@ARTICLE {Ranftl2022,

author = "Ren\'{e} Ranftl and Katrin Lasinger and David Hafner and Konrad Schindler and Vladlen Koltun",

title = "Towards Robust Monocular Depth Estimation: Mixing Datasets for Zero-Shot Cross-Dataset Transfer",

journal = "IEEE Transactions on Pattern Analysis and Machine Intelligence",

year = "2022",

volume = "44",

number = "3"

}

@article{Ranftl2021,

author = {Ren\'{e} Ranftl and Alexey Bochkovskiy and Vladlen Koltun},

title = {Vision Transformers for Dense Prediction},

journal = {ICCV},

year = {2021},

}

@misc{rw2019timm,

author = {Ross Wightman},

title = {PyTorch Image Models},

year = {2019},

publisher = {GitHub},

journal = {GitHub repository},

doi = {10.5281/zenodo.4414861},

howpublished = {\url{https://github.com/rwightman/pytorch-image-models}}

}