A Google Summer of Code 2021 Project Repository.

This project aims to demonstrate quantum machine learning's potential, specifically Quantum Convolutional Neural Network (QCNN), in HEP events classification from particle image data.

The code used in the research is wrapped as an open-source package to ease future research in this field.

Check the How to Use section to learn more about it.

- Organization

- Student

- Mentors

- Project Details

One of the challenges in High-Energy Physics (HEP) is event classification: predicting whether an image of particle jets belongs to the events being sought or to background signals. Classical Convolutional Neural Networks (CNNs) have proven to be powerful for image classification, including jet images. As quantum computers promise many advantages over classical computing, the question arises whether quantum machine learning (QML) can bring any improvement to this problem. This project aims to demonstrate quantum machine learning's potential, specifically that of the Quantum Convolutional Neural Network (QCNN), in HEP event classification from image data. The code used in the research is wrapped as an open-source package to ease future research in this field.

This package is a TensorFlow Quantum implementation of quantum convolution and classifier with Data Re-uploading[3] ansatz. Both are wrapped as Keras layers that can easily be integrated into other Keras layers (classical and/or quantum), acting as building blocks for Quantum Convolutional Neural Networks (both hybrid and fully quantum). The model can be trained using Keras API.
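To make the data re-uploading idea concrete, here is a minimal NumPy sketch of a single-qubit circuit in which the input is re-encoded in every layer through rotation angles `w * x + b`. It is an illustration only and not the package's TensorFlow Quantum implementation: the function names, the Rx-Ry-Rz gate order, and the grouping of features into triples are assumptions made for this example.

```python
import numpy as np


def rx(theta):
    """Single-qubit rotation about the X axis."""
    c, s = np.cos(theta / 2), np.sin(theta / 2)
    return np.array([[c, -1j * s], [-1j * s, c]])


def ry(theta):
    """Single-qubit rotation about the Y axis."""
    c, s = np.cos(theta / 2), np.sin(theta / 2)
    return np.array([[c, -s], [s, c]], dtype=complex)


def rz(theta):
    """Single-qubit rotation about the Z axis."""
    return np.array([[np.exp(-1j * theta / 2), 0], [0, np.exp(1j * theta / 2)]])


def reupload_expectation(x, weights, biases):
    """Return <Z> of one qubit after a data re-uploading circuit.

    x: flattened patch, length divisible by 3.
    weights, biases: arrays of shape (n_layers, len(x)).
    Gate order and feature grouping are assumptions of this sketch.
    """
    x = np.asarray(x, dtype=float)
    state = np.array([1.0, 0.0], dtype=complex)  # start in |0>
    for w, b in zip(weights, biases):
        angles = w * x + b  # the data is re-uploaded in every layer
        for a0, a1, a2 in angles.reshape(-1, 3):
            state = rz(a2) @ ry(a1) @ rx(a0) @ state
    probs = np.abs(state) ** 2
    return float(probs[0] - probs[1])


# A flattened 3x3 patch and two layers of random parameters.
patch = np.linspace(0.0, 1.0, 9)
rng = np.random.default_rng(0)
weights = rng.normal(size=(2, 9))
biases = rng.normal(size=(2, 9))
print(reupload_expectation(patch, weights, biases))
```

With this parametrization, one layer acting on a 3x3 patch has 9 weights and 9 biases, and the expectation value always lies in [-1, 1], so it can be passed on to the next layer like a classical feature map value.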

git clone https://github.com/eraraya-ricardo/qcnn-hep.git

cd qcnn-hep

python -m pip install -r requirements.txt

python setup.py

For a more detailed step-by-step installation, please refer to Docs and Tutorial.

- Week 1: Explored and got used to the dataset, trained a classical ResNet[1] model as a baseline.

- Week 2: Coded the graph-convolution preprocessing[2] and the Quantum Conv layer with data re-uploading[3] PQC.

- Week 3: Tested the first iteration of the QCNN model, coded the parallelized convolution, tested ResNet with 8x8 images.

- Week 4: Tested the Kaggle platform, tested the parallelized convolution, trained QCNN with varying hyperparameters.

- Week 5: Tested the classical CNN and Fully-connected NN, started to train the QCNN v0.1.0 with varying filter size & stride, coded the new ansatz for quantum convolution layer based on [4].

- Week 6: Tested the new quantum convolution ansatz[4], tried to combine ideas from the data re-uploading circuit with the new ansatz, presented a short summary of the project at the MCQST Student Conference.

- Week 7: Tested the QCNN v0.1.1 on MNIST[5] and LArTPC[4] dataset.

- Week 8: Wrapped the code in the development notebooks as a Python package.

- Week 9: Tested the QCNN v0.1.1 and classical CNN on the Quark-Gluon[6] dataset.

- Week 10: Cleaned up the repository, README, and the docs/tutorial notebook.

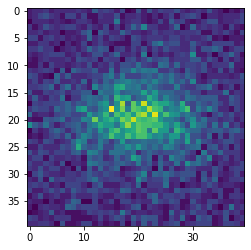

Averages of Photon (left) and Electron (right) image samples from the dataset.

The dataset contains images from two types of particles: photons (0) and electrons (1) captured by the ECAL detector.

- Each pixel corresponds to a detector cell.

- The intensity of the pixel corresponds to how much energy is measured in that cell.

- In total, there are 498,000 samples, equally distributed between the two classes.

- The images are 32x32 pixels.

If you are interested in using the dataset for your study, contact me and I can try to connect you to the people at ML4Sci who have the dataset.

Liquid Argon Time Projection Chamber (LArTPC) Dataset[4]

An image sample for each class from LArTPC dataset.

The dataset contains images of simulated particle activities (μ+, e−, p+, π+, π0, γ) in a LArTPC detector. It was prepared by the authors of [4] for their study.

- The images have a resolution of 480 x 600 pixels, where each pixel in the x-axis represents a single wire and each pixel in the y-axis represents a sampling time tick.

- Colors in the images represent the sizes of the ionization energy loss along the particle trajectories when measured by LArTPC’s wire planes.

- In total, there are 100 samples for each class.

- Each particle’s momentum is set such that the mean range of the particle is about 2 meters, so the classification is not sensitive to the image size.

- In this study, we use the images scaled to 30x30 by the original authors of the dataset.

- You can check reference [4] for more details of the dataset.

The dataset can be obtained from the original authors of [4] upon reasonable request.

Quark-Gluon Dataset[6]

Averages of Gluon (left) and Quark (right) image samples of the track channel from the subdataset of 10k samples.

The dataset contains images of simulated quark and gluon jets. Each image has three channels: the first holds the reconstructed tracks of the jet, the second the image captured by the electromagnetic calorimeter (ECAL) detector, and the third the image captured by the hadronic calorimeter (HCAL) detector.

- The images have a resolution of 125 x 125 pixels (for every channel).

- Since the original size of 125 x 125 pixels is too large for quantum computing simulation, we crop the images to a smaller size. For now, the size is limited to 40 x 40 pixels.

- In this study, we focus mostly on the tracks channel.

- You can check reference [6] for more details of the dataset.

If you are interested in using the dataset for your study, contact me and I can try to connect you to the people at ML4Sci who have the dataset.

MNIST Dataset[5]

An image sample for each class from MNIST dataset.

The dataset contains 8-bit grayscale images of handwritten digits, each 28 x 28 pixels, with a training set of 60,000 examples and a test set of 10,000 examples.

It can be obtained from [5].

The whole project was run on Google Colab, with the GPU varying between V100, P100, and T4. The runtimes listed in the Research section may not be precise because the GPU varied between runs. The main benchmarking metric is the test AUC.
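For readers unfamiliar with the metric, the AUC is the probability that a randomly chosen positive sample receives a higher score than a randomly chosen negative one, with ties counted as one half. The sketch below computes it directly from that definition with NumPy. The function name `roc_auc` is made up for this example, and the repository's own evaluation code may compute the metric differently.

```python
import numpy as np


def roc_auc(labels, scores):
    """AUC as the fraction of (positive, negative) pairs ranked correctly."""
    labels = np.asarray(labels)
    scores = np.asarray(scores, dtype=float)
    pos = scores[labels == 1]
    neg = scores[labels == 0]
    # Pairwise score differences between every positive and every negative.
    diff = pos[:, None] - neg[None, :]
    return float(((diff > 0).sum() + 0.5 * (diff == 0).sum()) / diff.size)


print(roc_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8]))  # 0.75
```

The pairwise matrix grows with the product of the two class sizes, so for large test sets a rank-based implementation or `tf.keras.metrics.AUC` is the more practical choice.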

| Notebook Version Name | Notes | Num. Trainable Params | Test AUC | Runtime (secs per epoch) |

|---|---|---|---|---|

| ResNet v2 | Whole samples with 15% for test samples, 200 epochs, 128 batch size, classical preprocessing: MinMax scaling -> subtract mean, optimizer: Adam(learning_rate=lr_schedule) | 295,074 | 0.80 | - |

| QCNN v0.1.0 (data re-uploading circuit) | Whole samples with 15% for test samples, 10 epochs, 128 batch size, 1 qubit, 1 layer, filter size = [3, 3], stride = [1, 1], followed by classical head [8, 2] with activation [relu, softmax], classical preprocessing: crop to 8x8 -> standard scaling, optimizer: Adam(learning_rate=lr_schedule) | 190 | 0.730 | (about 1.5 hours/epoch) |

| ResNet v2 | Whole samples with 15% for test samples, 200 epochs, 128 batch size, classical preprocessing: crop to 8x8 -> MinMax scaling -> subtract mean, optimizer: Adam(learning_rate=lr_schedule) | 295,074 | 0.63 (overfit, train AUC = 0.80) | - |

| QCNN v0.2.0 (data re-uploading circuit) | Whole samples with 15% for test samples, 10 epochs, 128 batch size, 2 qubits, 2 layers, filter size = [2, 2], stride = [2, 1], followed by classical head [8, 2] with activation [relu, softmax], classical preprocessing: crop to 8x8 -> standard scaling, optimizer: Adam(learning_rate=lr_schedule) | 194 | 0.68 | - |

| Classical CNN | Whole samples with 15% for test samples, 10 epochs, 128 batch size, filter size = [3, 3], stride = [1, 1], num. of filters = [2, 1], conv activation = [relu, relu], use_bias = [True, True], followed by classical head [8, 2] with activation [relu, softmax], classical preprocessing: crop to 8x8 -> standard scaling, optimizer: Adam(learning_rate=lr_schedule) | 193 | 0.738 | 13 |

| Classical Fully-connected NN | Whole samples with 15% for test samples, 10 epochs, 128 batch size, num. of nodes = [3, 2], activation [relu, softmax], classical preprocessing: crop to 8x8 -> standard scaling -> flatten to 64, optimizer: Adam(learning_rate=lr_schedule) | 203 | 0.691 | 10 |

QCNN v0.1.1 (data re-uploading circuit[3])

10k samples with 15% for test samples, 200 epochs, 128 batch size, varying qubits, varying layers, filter size = [3, 3], stride = [1, 1], followed by classical head [8, 2] with activation [relu, softmax], classical preprocessing: crop to 8x8 -> standard scaling

optimizer: Adam(learning_rate=lr_schedule)
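The "crop to 8x8 -> standard scaling" preprocessing named in the configuration can be sketched as follows. This is an assumption-laden illustration: the source does not say which region is cropped or how the statistics are computed, so the sketch uses a center crop and per-pixel mean and standard deviation taken from the training split only. The helper names and the random stand-in data are hypothetical.

```python
import numpy as np


def center_crop(images, size):
    """Crop the central size x size window (center crop is an assumption)."""
    h, w = images.shape[1:3]
    top, left = (h - size) // 2, (w - size) // 2
    return images[:, top:top + size, left:left + size]


def standard_scale(train, test, eps=1e-8):
    """Scale both splits with per-pixel statistics of the training split."""
    mean = train.mean(axis=0)
    std = train.std(axis=0)
    return (train - mean) / (std + eps), (test - mean) / (std + eps)


# Random stand-in for 32x32 detector images, with 15% held out for testing.
rng = np.random.default_rng(0)
images = rng.random((100, 32, 32))
split = len(images) * 85 // 100
train = center_crop(images[:split], 8)
test = center_crop(images[split:], 8)
train, test = standard_scale(train, test)
print(train.shape, test.shape)  # (85, 8, 8) (15, 8, 8)
```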

| Num. Qubits | Num. Layers | Num. Trainable Params | Train AUC | Test AUC | Runtime (secs per epoch) |

|---|---|---|---|---|---|

| 1 | 1 | 190 | 0.689 | 0.636 | ±80 |

| 1 | 2 | 226 | 0.716 | 0.666 | ±165 |

| 1 | 3 | 262 | 0.687 | 0.622 | ±330 |

| 1 | 4 | 298 | 0.691 | 0.607 | ±370 |

| 2 | 1 | 226 | 0.687 | 0.661 | ±200 |

| 2 | 2 | 298 | 0.710 | 0.645 | ±420 |

| 3 | 1 | 262 | 0.691 | 0.655 | ±350 |

| 3 | 2 | 370 | 0.707 | 0.636 | ±670 |

Validation AUC for Varying the Number of Layers and Qubits (0.0 = not tested).

10k samples with 15% for test samples, 200 epochs, 128 batch size, varying qubits, varying layers, filter size = [2, 2], stride = [1, 1], followed by classical head [8, 2] with activation [relu, softmax], classical preprocessing: crop to 8x8 -> standard scaling

| Num. Qubits | Num. Layers | Num. Trainable Params | Train AUC | Test AUC | Runtime (secs per epoch) |

|---|---|---|---|---|---|

| 1 | 1 | 338 | 0.650 | 0.623 | ±120 |

2 classes, 160 training samples (80 per class), 40 testing samples (20 per class), 200 epochs, 16 batch size, varying qubits, varying layers, filter size = [3, 2], stride = [2, 2], followed by classical head [2] with activation [softmax], classical preprocessing: log scaling -> MinMax scaling

optimizer: RMSProp(learning_rate=0.01, rho=0.99, epsilon=1e-08)
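A possible reading of the "log scaling -> MinMax scaling" preprocessing is sketched below. The use of `np.log1p` (so that zero-valued pixels stay finite) and of a single global minimum and maximum are assumptions of this example, as is the function name `log_minmax`. The input is assumed to be non-negative.

```python
import numpy as np


def log_minmax(images):
    """Compress the dynamic range with log(1 + x), then rescale to [0, 1]."""
    logged = np.log1p(images)
    lo, hi = logged.min(), logged.max()
    if hi == lo:
        # Constant input: nothing to rescale.
        return np.zeros_like(logged)
    return (logged - lo) / (hi - lo)


# Random stand-in for 30x30 images with a wide range of pixel values.
rng = np.random.default_rng(0)
images = rng.exponential(scale=50.0, size=(4, 30, 30))
scaled = log_minmax(images)
print(scaled.min(), scaled.max())  # 0.0 1.0
```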

| Classes | Num. Qubits | Num. Layers | Num. Trainable Params | Train AUC | Test AUC | Train Accuracy | Test Accuracy | Runtime (secs per epoch) |

|---|---|---|---|---|---|---|---|---|

| e- vs μ+ | 1 | 1 | 130 | 1.0 | 0.977 | 1.0 | 0.925 | ±6 |

| e- vs μ+ | 1 | 2 | 160 | 1.0 | 0.971 | 1.0 | 0.925 | ±14 |

| e- vs μ+ | 2 | 2 | 220 | 1.0 | 0.996 | 1.0 | 0.950 | ±27 |

| p+ vs μ+ | 1 | 1 | 130 | 1.0 | 0.980 | 1.0 | 0.950 | ±6 |

| p+ vs μ+ | 2 | 2 | 220 | 1.0 | 0.969 | 1.0 | 0.925 | ±25 |

| π+ vs μ+ | 1 | 1 | 130 | 1.0 | 0.928 | 1.0 | 0.850 | ±6 |

| π+ vs μ+ | 1 | 2 | 160 | 1.0 | 0.921 | 1.0 | 0.875 | ±11 |

| π+ vs μ+ | 1 | 3 | 190 | 1.0 | 0.863 | 1.0 | 0.825 | ±18 |

| π+ vs μ+ | 2 | 1 | 160 | 1.0 | 0.890 | 1.0 | 0.925 | ±12 |

| π+ vs μ+ | 2 | 2 | 220 | 1.0 | 0.977 | 1.0 | 0.950 | ±24 |

| π+ vs μ+ | 2 | 3 | 280 | 1.0 | 0.940 | 1.0 | 0.850 | ±36 |

| π+ vs μ+ | 3 | 1 | 190 | 1.0 | 0.896 | 1.0 | 0.875 | ±18 |

| π+ vs μ+ | 3 | 2 | 280 | 1.0 | 0.971 | 1.0 | 0.925 | ±35 |

| π+ vs μ+ | 3 | 3 | 370 | 1.0 | 0.954 | 1.0 | 0.850 | ±55 |

-----

Comparison of Best Models.

The model developed in this project (*) produced results similar to those of the QCNN and classical CNN models from [4] (†) while using fewer qubits and fewer trainable parameters. Reference [4] did not report AUC scores.

| Classes | Model | Num. Qubits | Num. Trainable Params | Train Accuracy | Test Accuracy | Train AUC | Test AUC |
|---|---|---|---|---|---|---|---|
| e- vs μ+ | QCNN-DRC* | 2 | 220 | 1.0 | 0.950 | 1.0 | 0.996 |
| e- vs μ+ | QCNN† | 9 | 472 | 1.0 | 0.925 | - | - |
| e- vs μ+ | CNN† | (classical) | 498 | 0.9938 | 0.950 | - | - |
| p+ vs μ+ | QCNN-DRC* | 1 | 130 | 1.0 | 0.950 | 1.0 | 0.980 |
| p+ vs μ+ | QCNN† | 9 | 472 | 1.0 | 0.975 | - | - |
| p+ vs μ+ | CNN† | (classical) | 498 | 0.9125 | 0.80 | - | - |
| π+ vs μ+ | QCNN-DRC* | 2 | 220 | 1.0 | 0.950 | 1.0 | 0.977 |
| π+ vs μ+ | QCNN† | 9 | 472 | 0.9688 | 0.975 | - | - |
| π+ vs μ+ | CNN† | (classical) | 498 | 0.975 | 0.825 | - | - |

This part is still a work in progress. Training the model requires a machine with much higher specifications (in particular more RAM) because this dataset is huge.

Early testing with small samples showed promising results. The training accuracies and AUCs are high, which indicates that the model was able to learn how to differentiate the data. The low test metrics indicate overfitting: the model failed to generalize well, so we need to train it on a larger number of samples.

2 classes, 850 training samples (425 per class), 150 testing samples (75 per class), 200 epochs, 128 batch size, varying qubits, varying layers, filter size = [3, 3], stride = [2, 1], followed by classical head [8, 2] with activation [relu, softmax], classical preprocessing: crop to 40x40 -> log scaling -> MinMax scaling

optimizer: Adam(learning_rate=lr_schedule)

Averages of Gluon (left) and Quark (right) image samples of the track channel from the subdataset of 1k samples after cropping to 40 x 40.

| Num. Qubits | Num. Layers | Num. Trainable Params | Train AUC | Test AUC | Train Accuracy | Test Accuracy | Runtime (secs per epoch) |

|---|---|---|---|---|---|---|---|

| 1 | 1 | 2374 | 0.712 | 0.531 | 0.659 | 0.567 | 128 |

| 1 | 2 | 2410 | 0.928 | 0.559 | 0.846 | 0.567 | 370 |

| 1 | 3 | 2446 | 0.992 | 0.571 | 0.956 | 0.560 | 670 |

| 2 | 1 | 2410 | 0.829 | 0.663 | 0.754 | 0.640 | 450 |

2 classes, 400 training samples (200 per class), 1000 testing samples (500 per class), 10 epochs, 32 batch size, varying qubits, varying layers, filter size = [3, 3], stride = [2, 2], followed by classical head [8, 2] with activation [relu, softmax], classical preprocessing: crop to 27x27 -> pixels range [0, 1] (divide all pixels by 255)

optimizer: Adam(learning_rate=lr_schedule)

| Classes | Num. Qubits | Num. Layers | Num. Trainable Params | Train AUC | Test AUC | Train Accuracy | Test Accuracy | Runtime (secs per epoch) |

|---|---|---|---|---|---|---|---|---|

| 0 vs 1 | 1 | 1 | 350 | 0.999 | 0.999 | 0.99 | 0.99 | ±40 |

-----

2 classes, 400 training samples (200 per class), 1000 testing samples (500 per class), 200 epochs, 32 batch size, varying qubits, varying layers, filter size = [3, 3], stride = [2, 2], followed by classical head [2] with activation [softmax], classical preprocessing: pixels range [0, 1] (divide all pixels by 255)

optimizer: Adam(learning_rate=0.001)

| Classes | Num. Qubits | Num. Layers | Num. Trainable Params | Train AUC | Test AUC | Train Accuracy | Test Accuracy | Runtime (secs per epoch) |

|---|---|---|---|---|---|---|---|---|

| 0 vs 1 | 1 | 1 | 110 | 0.9999 | 0.9999 | 0.998 | 0.996 | ±41 |

| 3 vs 6 | 1 | 1 | 110 | 0.999 | 0.998 | 0.998 | 0.985 | ±55 |

| 4 vs 7 | 1 | 1 | 110 | 0.9999 | 0.993 | 0.998 | 0.967 | ±42 |

| 8 vs 9 | 1 | 1 | 110 | 0.997 | 0.978 | 0.975 | 0.927 | ±41 |

| 2 vs 5 | 1 | 1 | 110 | 0.9997 | 0.994 | 0.995 | 0.962 | ±41 |

QCNN v0.3.0 (circuit from [4])

10k samples with 15% for test samples, 200 epochs, 128 batch size, varying layers, filter size = [3, 2], stride = [1, 1], followed by classical head [8, 2] with activation [relu, softmax], classical preprocessing: crop to 8x8 -> convert all pixels' value with arctan function

optimizer: Adam(learning_rate=lr_schedule)
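The arctan preprocessing step maps every non-negative pixel value into the bounded interval [0, π/2), which is a convenient range for rotation angles. A small NumPy illustration with made-up pixel values (how the package feeds these values into the circuit is not shown here):

```python
import numpy as np

# Made-up pixel intensities spanning several orders of magnitude.
pixels = np.array([0.0, 0.5, 10.0, 1e4])

# arctan is monotonic and saturates towards pi/2 for large inputs.
angles = np.arctan(pixels)
print(angles)
```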

| Num. Layers | Num. Trainable Params | Train AUC | Test AUC | Runtime (secs per epoch) |

|---|---|---|---|---|

| 1 | 265 | 0.613 | 0.586 | ±300 |

| 2 | 304 | 0.663 | 0.644 | ±570 |

| 3 | 343 | 0.647 | 0.630 | ±780 |

| 4 | 382 | 0.653 | 0.635 | ±950 |

10k samples with 15% for test samples, 200 epochs, 128 batch size, filter size = [3, 3], stride = [1, 1], conv activation = [relu, relu], use_bias = [True, True], followed by classical head [8, 2] with activation [relu, softmax], classical preprocessing: crop to 8x8 -> standard scaling

optimizer: Adam(learning_rate=lr_schedule)

| Num. of filters | Num. Trainable Params | AUC Train | AUC Test | Runtime (secs per epoch) |

|---|---|---|---|---|

| [2, 1] | 193 | 0.723 | 0.675 | ±0.268 |

| [4, 1] | 231 | 0.735 | 0.693 | ±0.268 |

| [6, 1] | 269 | 0.745 | 0.696 | ±0.268 |

| [8, 1] | 307 | 0.746 | 0.700 | ±0.268 |

| [4, 2] | 396 | 0.764 | 0.699 | ±0.268 |

| [4, 3] | 561 | 0.784 | 0.687 | ±0.268 |
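The parameter counts in the table can be reproduced with a few lines of arithmetic. The sketch below assumes two convolution layers without padding on a single-channel 8x8 input, followed by the dense head [8, 2]. These architectural details are inferred from the listed configuration rather than taken from the source code, and the function names are hypothetical.

```python
def conv_out(size, kernel, stride):
    """Output width of a convolution without padding."""
    return (size - kernel) // stride + 1


def cnn_param_count(filters, input_size=8, kernel=3, stride=1, head=(8, 2)):
    """Trainable parameters of conv layers (with bias) plus a dense head."""
    params, size, channels = 0, input_size, 1
    for n_filters in filters:
        # kernel weights for every input channel, plus one bias per filter
        params += kernel * kernel * channels * n_filters + n_filters
        size = conv_out(size, kernel, stride)
        channels = n_filters
    units_in = size * size * channels  # flattened feature map
    for units in head:
        params += units_in * units + units
        units_in = units
    return params


for filters in ([2, 1], [4, 1], [6, 1], [8, 1], [4, 2], [4, 3]):
    print(filters, cnn_param_count(filters))
```

Under these assumptions the function returns 193, 231, 269, 307, 396, and 561, matching the "Num. Trainable Params" column.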

- lr_schedule

```python
import numpy as np
import tensorflow as tf


def lr_schedule(epoch):
    """Learning Rate Schedule

    Learning rate is scheduled to be reduced after 80, 120, 160, 180 epochs.
    Called automatically every epoch as part of callbacks during training.

    # Arguments
        epoch (int): The number of epochs

    # Returns
        lr (float32): learning rate
    """
    lr = 1e-3
    if epoch > 180:
        lr *= 0.5e-3
    elif epoch > 160:
        lr *= 1e-3
    elif epoch > 120:
        lr *= 1e-2
    elif epoch > 80:
        lr *= 1e-1
    print('Learning rate: ', lr)
    return lr


# Both `lr_scheduler` and `lr_reducer` are used for `callbacks` argument of Keras' model training API
lr_scheduler = tf.keras.callbacks.LearningRateScheduler(lr_schedule)
lr_reducer = tf.keras.callbacks.ReduceLROnPlateau(factor=np.sqrt(0.1),
                                                  cooldown=0,
                                                  patience=5,
                                                  min_lr=0.5e-6)
```

[5] LeCun Y, Cortes C. MNIST handwritten digit database 2010.

If you want to contribute to the code (e.g., adding new features, providing another usage example as a Jupyter Notebook, or sharing research results), please let me know by sending a pull request with a comprehensive PR note on what you added and the reasoning behind it. I won't be too strict about it, as the main goal of this project is more "research" than "code development". So, as long as it is clear and good enough, I will merge the PR.

You can also open a new issue or contact me if you want to discuss things first.

If you used the code and found any bugs or errors, or have any suggestions, criticism, requests, etc., please let me know either by opening a new issue or by contacting me.

Thank you!