Do you want to learn unsupervised representations of 3D scenes? Boy do I have the kick for you 🕍

Watch a video: https://vimeo.com/345774866

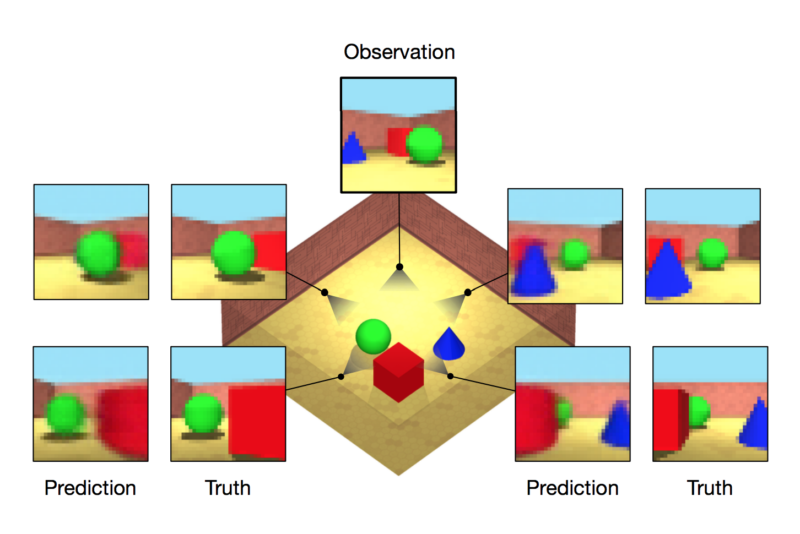

This is an implementation of Neural Scene Representation and Rendering (Eslami et al., 2018).

The authors are all pretty cool: S. M. Ali Eslami, Danilo Jimenez Rezende, Frederic Besse, Fabio Viola, Ari S. Morcos, Marta Garnelo

Summary: If you haven't read the blog post already, you should! It's super cool, and will help you get a head start on what's here.

And before you commit to reading the rest of this document, please know that there exist other implementations of GQN out there! You've got choices!

- https://github.com/wohlert/generative-query-network-pytorch

- https://github.com/iShohei220/torch-gqn

- https://github.com/ogroth/tf-gqn (if you like TensorFlow)

This repository might look a bit intimidating, but I'm always here, and I'll try to make this README informative!

Steps:

1. Getting the training data (to replicate the networks in the original paper)

2. Training your ~~dragon~~ neural nets

3. How to tell that it works (and exploring what it can do)

Steps 1 and 2 are optional (and more straightforward, since the authors did all the hard work for us already by telling us how the net is built and so forth). I've trained a GQN for ~40k gradient steps (see /notes for more details), so there are checkpoints that you can use out of the box! You can skip to step 3.

You should probably start by deciding what dataset to use. I used shepard_metzler_5_parts, but there are others: here. Personally I'd like to see one trained on rooms_ring_camera_with_object_rotations, but that's just me 😊

The scripts:

- `download_data.sh` can help you download the data with `gsutil`, and put the data in a directory `data/`

- `tf2torch.py` will convert the `.tfrecord` files to a LOT of `.pt.gz` files, each one being one particular example. Other implementations (see wohlert) will do this differently (e.g. by making one big file instead of a lot of little ones)
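To make the "lots of little files" layout concrete, here's a minimal sketch of reading one example per gzip-compressed file, loading each file only when it's asked for. This is not the repo's actual dataset class. The class name `GzipExampleFolder`, the `load_fn` parameter, and the `images`/`viewpoints` keys are all made up for illustration, and the demo uses `pickle` as a stand-in serializer so it runs without PyTorch. For real `.pt.gz` files you would most likely pass `torch.load` as `load_fn`.

```python
import gzip
import pickle
import tempfile
from pathlib import Path


class GzipExampleFolder:
    """Lazily loads one example per gzip-compressed file in a directory.

    Hypothetical helper for illustration; not part of this repository.
    """

    def __init__(self, root, load_fn=pickle.load, suffix=".pt.gz"):
        # Sort so that the index -> file mapping is stable across runs.
        self.paths = sorted(Path(root).glob(f"*{suffix}"))
        # Assumption: load_fn takes an open binary file object.
        self.load_fn = load_fn

    def __len__(self):
        return len(self.paths)

    def __getitem__(self, index):
        # Only the requested file is decompressed and deserialized.
        with gzip.open(self.paths[index], "rb") as f:
            return self.load_fn(f)


def demo():
    # Write three tiny stand-in examples, then read one of them back.
    with tempfile.TemporaryDirectory() as tmp:
        for i in range(3):
            example = {"images": [i] * 4, "viewpoints": [0.0] * 5}
            with gzip.open(Path(tmp) / f"{i}.pt.gz", "wb") as f:
                pickle.dump(example, f)
        dataset = GzipExampleFolder(tmp)
        return len(dataset), dataset[1]["images"]
```

The trade-off with this layout is many small reads instead of one big one: it's easy to shuffle and to resume a partial conversion, but it can be slow on filesystems that dislike lots of tiny files.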

Great! Now we have training data. When I was writing the various bits, I wrote the tests

- `test_datasets.py` will see if you have the ShepardMetzler dataset ready-to-go

- `test_building_blocks.py` will see if your neural net is OK

- `test_gqn.py` will run one step of training (load the data, load the model, etc). If this passes, you should be ready to scale to full training!

python run-gqn.py --data_parallel=True --batch_size=144 --workers=2

This will save backups every 1000 steps, and log changes to TensorBoard.

Note to self (and everyone): Add some tips about logging/keeping track of stuff during training!

Fast forward a few hours, or days: Awesome! We have a checkpoint, and the images on TensorBoard look pretty good. What now?

What can a trained model do?

Almost anything, you just have to code stuff. 🌼 You can:

- delete half the weights?

- watch the activations as an image passes through it

- compare the representations for different scenes

- make it rotate!!

Check out the /fun folder for my first attempts. Lots more to be done here, so please submit (many) a pull request with suggestions!
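As one example of "compare the representations for different scenes", here's a minimal sketch using cosine similarity. It assumes you've already pulled a scene representation out of the model and flattened it into a plain list of floats. The three vectors below are made-up numbers, not real GQN outputs, and cosine similarity is just one reasonable choice of measure.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)


# Made-up, flattened "representations" for three scenes (illustrative only).
scene_a = [0.9, 0.1, 0.0, 0.4]
scene_b = [0.8, 0.2, 0.1, 0.5]
scene_c = [-0.3, 0.9, 0.7, 0.0]

sim_ab = cosine_similarity(scene_a, scene_b)  # similar direction, close to 1
sim_ac = cosine_similarity(scene_a, scene_c)  # different direction, below 0 here
```

A fun thing to try with real representations: check whether two views of the same scene score higher than views of two different scenes.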

If I don't want to train my own model, can I grab the checkpoints that you have?

Sure, right here.

Why do the images in fun/Interactive.ipynb suck?

I only trained it for about 40k gradient steps (each one from a mini-batch of 36 scenes). I think the paper had like... 2 million gradient steps? The 5k Gradient Steps More Fund
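To put those numbers side by side, here's the back-of-the-envelope arithmetic. The 40k steps and the mini-batch of 36 come from this README. The 2 million figure is my rough recollection of the paper, so treat it as an assumption.

```python
steps_here = 40_000      # gradient steps for the shared checkpoint (from this README)
scenes_per_step = 36     # mini-batch size quoted above
steps_paper = 2_000_000  # rough recollection of the paper, not a verified figure

scenes_seen = steps_here * scenes_per_step
fraction_of_paper = steps_here / steps_paper

print(f"scenes seen: {scenes_seen:,}")                            # scenes seen: 1,440,000
print(f"fraction of the paper's steps: {fraction_of_paper:.0%}")  # fraction of the paper's steps: 2%
```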

Help, something happened!

IS IT A MURDERFISH?!! ....... if not, I made a help document, see if that helps?

Need lots of help on this! If you're a researcher, graphic designer, student, garden cat, aspiring engineer, auto mech, you name it: chances are you'll spot something that you like/don't like/want this to do/can fix! Open a pull request!

Some ideas:

- I want this to be more accessible. This could take the form of visualizations, code comments, tutorial/guides, chat group? Hmm.

- If anyone is interested in starting a joint fund for people to learn and train AI models, I'm down.

- Train the model (even 1 epoch helps!) and contribute the checkpoints to the world!

- Analysis is laaaacking. Add more things to the `fun` folder!

- Experiment with different domains!

It's yours :) You wrote it. Now take good care of it