By using Dentalkart Scraper, you agree to comply with all applicable local and international laws related to data scraping, copyright, and privacy. The developers of Dentalkart Scraper will not be held liable for any misuse of this software. It is the user's sole responsibility to ensure adherence to all relevant laws regarding data scraping, copyright, and privacy, and to use Dentalkart Scraper in an ethical and legal manner, in line with both local and international regulations.

We take concerns related to the Dentalkart Scraper Project very seriously. If you have any inquiries or issues, please contact Chetan Jain at chetan@omkar.cloud. We will take prompt and necessary action in response to your emails.

🌟 Scrape DentalKart Products! 🤖

This scraper is designed to help you download DentalKart Products.

1️⃣ Clone the Magic 🧙‍♀️:

git clone https://github.com/omkarcloud/dentalkart-scraper

cd dentalkart-scraper

2️⃣ Install Dependencies 📦:

python -m pip install -r requirements.txt

3️⃣ Let the Rain of DentalKart Products Begin 😎:

python main.py

Once the scraping process is complete, you can find your DentalKart Products in output/finished.csv.
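Once a run has finished, a quick sanity check of the CSV can help confirm that products were actually written. The snippet below is a minimal sketch using only the Python standard library. It assumes the file has a header row and is UTF-8 encoded; the column names are not documented here, so the code simply reports whatever it finds. The helper name `summarize_csv` is hypothetical and not part of the scraper.

```python
import csv
from pathlib import Path


def summarize_csv(path):
    """Return (column_names, row_count) for a CSV file with a header row."""
    with Path(path).open(newline="", encoding="utf-8") as f:
        reader = csv.DictReader(f)
        # Count data rows; the header row is consumed by DictReader itself.
        row_count = sum(1 for _ in reader)
        # fieldnames is None for an empty file, so fall back to an empty list.
        columns = list(reader.fieldnames or [])
    return columns, row_count


if __name__ == "__main__":
    output_file = Path("output/finished.csv")  # path given in this README
    if output_file.exists():
        columns, row_count = summarize_csv(output_file)
        print(f"{row_count} rows, columns: {columns}")
    else:
        print(f"{output_file} not found; run python main.py first")
```

Save it next to main.py under any name you like and run it with python after the scraper has finished.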

You can interrupt the scraper at any time. When you run it again, it will resume from where it left off.
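How the scraper implements resuming is not described in this README. For readers curious about the general idea, the sketch below shows one common approach: record each finished item in a checkpoint file and skip recorded items on the next run. Everything here is an illustrative assumption, including the function name `process_with_resume`, the JSON checkpoint format, and the `handle` callback. It is not the scraper's actual code.

```python
import json
from pathlib import Path


def process_with_resume(items, checkpoint_path, handle):
    """Process items, skipping any already recorded in the checkpoint file."""
    checkpoint = Path(checkpoint_path)
    # Load the set of items completed in earlier runs, if any.
    done = set(json.loads(checkpoint.read_text())) if checkpoint.exists() else set()
    for item in items:
        if item in done:
            continue  # finished in an earlier run
        handle(item)
        done.add(item)
        # Persist progress after every item so an interruption
        # loses at most the item that was in flight.
        checkpoint.write_text(json.dumps(sorted(done)))
    return done
```

With this pattern, pressing Ctrl+C midway and calling the function again with the same checkpoint path only processes the items that were not yet completed.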

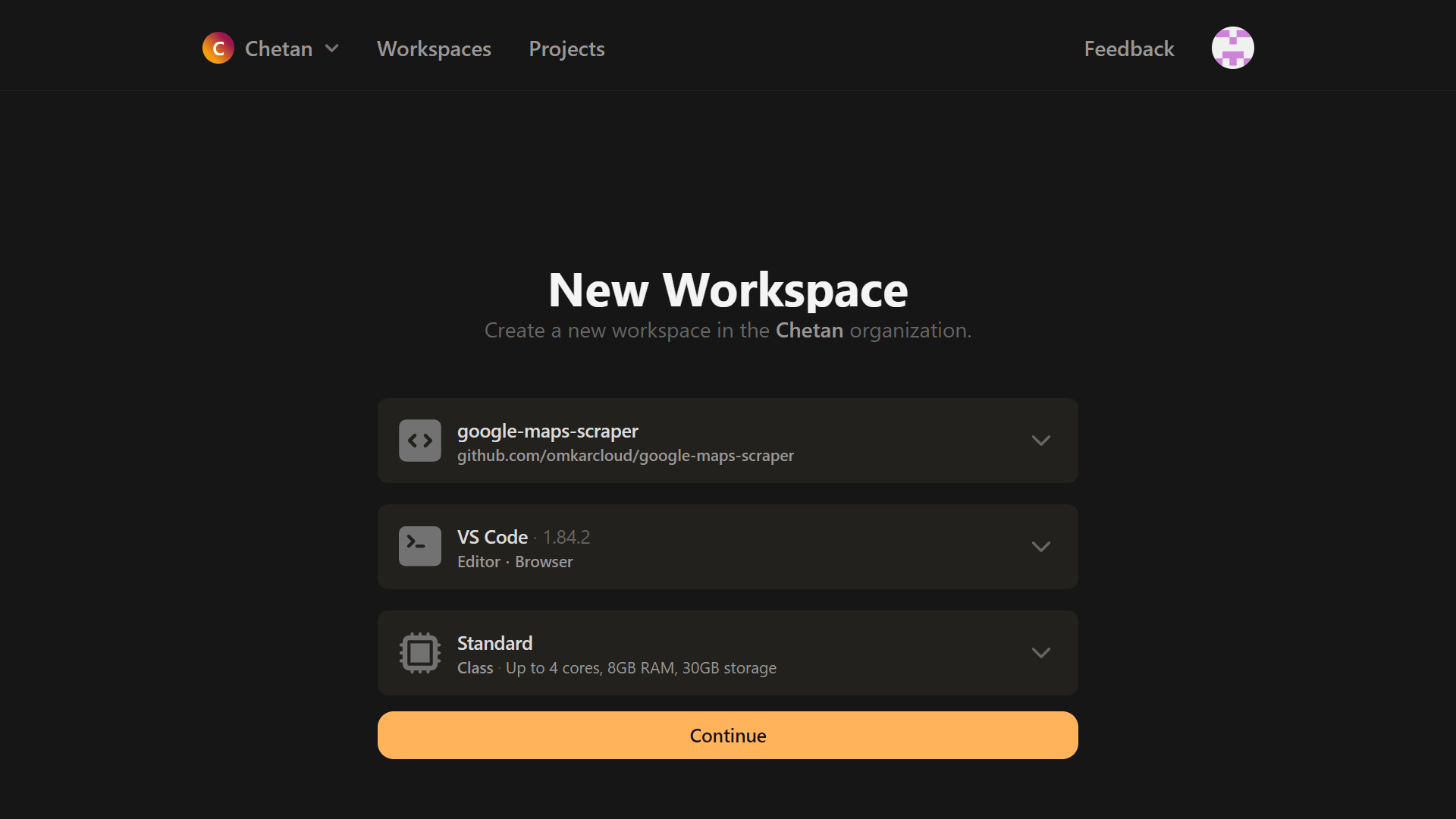

You can easily run the scraper in Gitpod, a browser-based development environment. Set it up in just 5 minutes by following these steps:

- Visit this link and sign up using your GitHub account.

- Once signed up, open the project in Gitpod.

- In the terminal, run the following command to start scraping:

  python main.py

- Once the scraper has finished running, you can download the data from the output folder.

Also, it's important to regularly interact with the Gitpod environment, such as clicking within it every 30 minutes, to keep the machine active and prevent automatic shutdown.

If you don't want to click every 30 minutes, we encourage you to install Python on your PC and run the scraper locally.

Love It? Star It ⭐!

Become one of our amazing stargazers by giving us a star ⭐ on GitHub!

It's just one click, but it means the world to me.