Releases: open-mmlab/mmagic

MMagic v1.2.0 Release

Highlights

- PowerPaint, an advanced and powerful inpainting algorithm, has been released in our repository.

New Features & Improvements

- [Release] Post release for v1.1.0 by @liuwenran in #2043

- [CodeCamp2023-645]Add dreambooth new cfg by @YanxingLiu in #2042

- [Enhance] add new config for base dir by @liuwenran in #2053

- [Enhance] support using from_pretrained for instance_crop by @zengyh1900 in #2066

- [Enhance] update support for latest diffusers with lora by @zengyh1900 in #2067

- [Feature] PowerPaint by @zhuang2002 in #2076

- [Enhance] powerpaint improvement by @liuwenran in #2078

- [Enhance] Improve powerpaint by @liuwenran in #2080

- [Enhance] add outpainting to gradio_PowerPaint.py by @zhuang2002 in #2084

- [MMSIG] Add new configuration files for StyleGAN2 by @xiaomile in #2057

- [MMSIG] [Doc] Update data_preprocessor.md by @jinxianwei in #2055

- [Enhance] Enhance PowerPaint by @zhuang2002 in #2093

Bug Fixes

- [Fix] Update README.md by @eze1376 in #2048

- [Fix] Fix test tokenizer by @liuwenran in #2050

- [Fix] fix readthedocs building by @liuwenran in #2052

- [Fix] --local-rank for PyTorch >= 2.0.0 by @youqingxiaozhua in #2051

- [Fix] animatediff download from openxlab by @JianxinDong in #2061

- [Fix] fix best practice by @liuwenran in #2063

- [Fix] try import expand mask from transformers by @zengyh1900 in #2064

- [Fix] Update diffusers to v0.23.0 by @liuwenran in #2069

- [Fix] add openxlab link to powerpaint by @liuwenran in #2082

- [Fix] Update swinir_x2s48w8d6e180_8xb4-lr2e-4-500k_div2k.py, use MultiValLoop. by @ashutoshsingh0223 in #2085

- [Fix] Fix a test expression that has a logical short circuit. by @munahaf in #2046

- [Fix] Powerpaint to load safetensors by @sdbds in #2088
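The --local-rank fix (#2051) relates to the PyTorch 2.0 distributed launcher. That launcher passes the local rank as --local-rank rather than --local_rank. Below is a minimal sketch of one common way for a training script to accept both spellings using only argparse. The parse_args function and its config positional argument are illustrative, and this is not necessarily the exact change made in #2051.

```python
import argparse
import os


def parse_args(argv=None):
    """Parse CLI arguments for a hypothetical training entry point."""
    parser = argparse.ArgumentParser(description='train a model')
    parser.add_argument('config', help='train config file path')
    # PyTorch < 2.0 launchers pass `--local_rank`; PyTorch >= 2.0 passes
    # `--local-rank`. Both option strings store into `args.local_rank`
    # because argparse derives `dest` from the first long option.
    parser.add_argument('--local_rank', '--local-rank', type=int, default=0)
    args = parser.parse_args(argv)
    # Expose the rank to code that reads it from the environment instead.
    if 'LOCAL_RANK' not in os.environ:
        os.environ['LOCAL_RANK'] = str(args.local_rank)
    return args
```

For example, parse_args(['my_config.py', '--local-rank', '1']).local_rank and parse_args(['my_config.py', '--local_rank', '1']).local_rank both give 1.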

New Contributors

- @eze1376 made their first contribution in #2048

- @youqingxiaozhua made their first contribution in #2051

- @JianxinDong made their first contribution in #2061

- @zhuang2002 made their first contribution in #2076

- @ashutoshsingh0223 made their first contribution in #2085

- @jinxianwei made their first contribution in #2055

- @munahaf made their first contribution in #2046

- @sdbds made their first contribution in #2088

Full Changelog: v1.1.0...v1.2.0

MMagic v1.1.0 Release

Highlights

In this new version of MMagic, we have added support for the following five new algorithms.

- Support ViCo, a new SD personalization method. Click to View

- Support AnimateDiff, a popular text2animation method. Click to View

- Support SDXL. Click to View

- Support DragGAN implementation with MMagic. Click to View

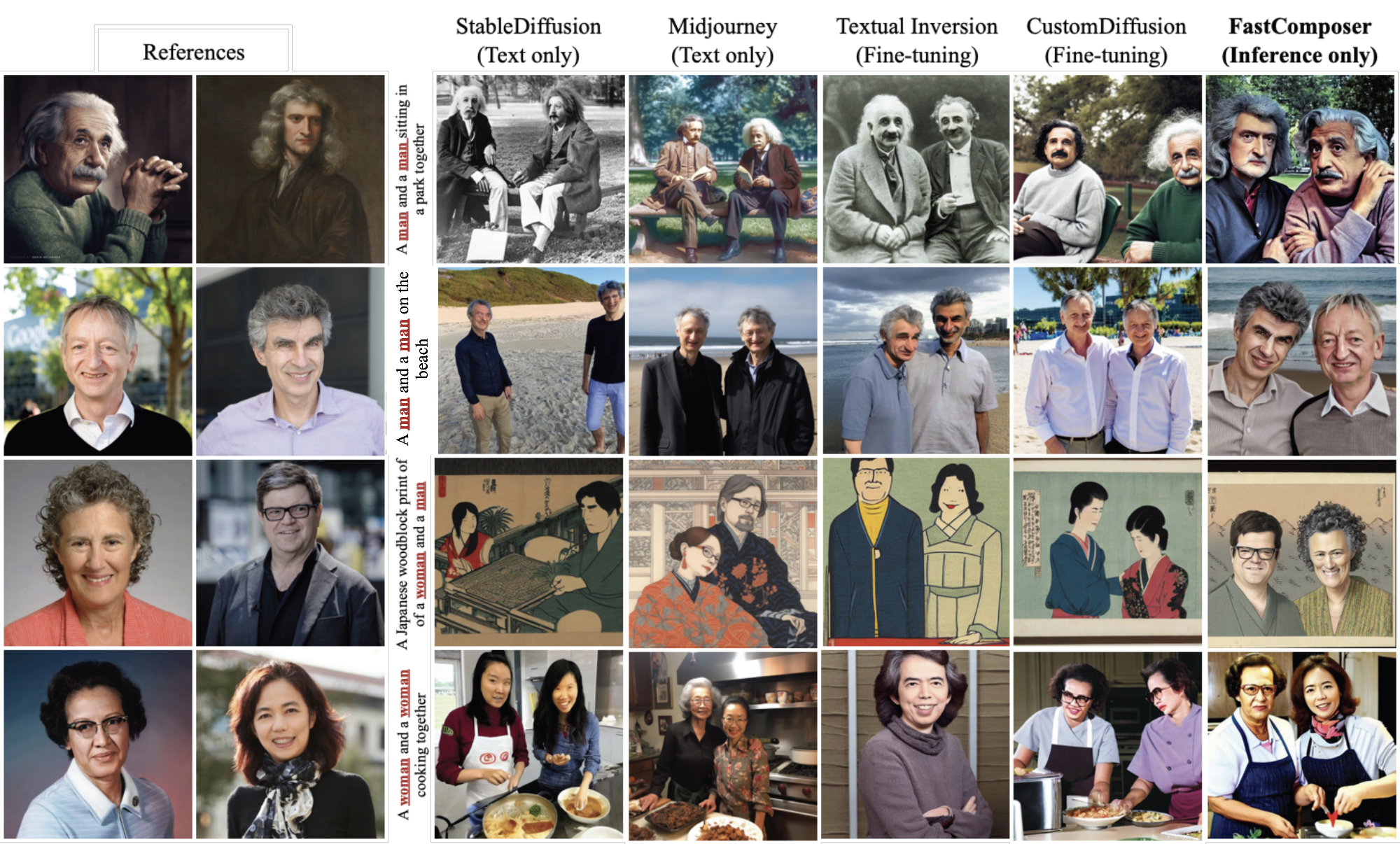

- Support for FastComposer. Click to View

New Features & Improvements

- [Feature] Support inference with diffusers pipeline, sd_xl first. by @liuwenran in #2023

- [Enhance] add negative prompt for sd inferencer by @liuwenran in #2021

- [Enhance] Update flake8 checking config in setup.cfg by @LeoXing1996 in #2007

- [Enhance] Add 'config_name' as a supplement to the 'model_setting' by @liuwenran in #2027

- [Enhance] faster test by @okotaku in #2034

- [Enhance] Add OpenXLab Badge by @ZhaoQiiii in #2037

CodeCamp Contributions

- [CodeCamp2023-643] Add new configs of BigGAN by @limafang in #2003

- [CodeCamp2023-648] MMagic new config GuidedDiffusion by @ooooo-create in #2005

- [CodeCamp2023-649] MMagic new config Instance Colorization by @ooooo-create in #2010

- [CodeCamp2023-652] MMagic new config StyleGAN3 by @hhy150 in #2018

- [CodeCamp2023-653] Add new configs of Real BasicVSR by @RangeKing in #2030

Bug Fixes

- [Fix] Fix best practice and back to contents on mainpage, add new models to model zoo by @liuwenran in #2001

- [Fix] Check CI error and remove main stream gpu test by @liuwenran in #2013

- [Fix] Check circle ci memory by @liuwenran in #2016

- [Fix] remove code and fix clip loss ut test by @liuwenran in #2017

- [Fix] mock infer in diffusers pipeline inferencer ut. by @liuwenran in #2026

- [Fix] Fix bug caused by merging draggan by @liuwenran in #2029

- [Fix] Update QRcode by @crazysteeaam in #2009

- [Fix] Replace the download links in README with OpenXLab version by @FerryHuang in #2038

- [Fix] Increase docstring coverage by @liuwenran in #2039

New Contributors

- @limafang made their first contribution in #2003

- @ooooo-create made their first contribution in #2005

- @hhy150 made their first contribution in #2018

- @ZhaoQiiii made their first contribution in #2037

- @ElliotQi made their first contribution in #1980

- @Beaconsyh08 made their first contribution in #2012

Full Changelog: v1.0.2...v1.1.0

MMagic v1.0.2 Release

Highlights

1. More detailed documentation

Thank you to the community contributors for helping us improve the documentation. We have improved many documents, including both Chinese and English versions. Please refer to the documentation for more details.

2. New algorithms

- Support Prompt-to-prompt, DDIM Inversion and Null-text Inversion.

From right to left: original image, DDIM inversion, Null-text inversion

Prompt-to-prompt Editing

- Support Textual Inversion.

- Support Attention Injection for more stable video generation with ControlNet.

- Support Stable Diffusion Inpainting.

New Features & Improvements

- [Enhancement] Support noise offset in stable diffusion training by @LeoXing1996 in #1880

- [Community] Support Glide Upsampler by @Taited in #1663

- [Enhance] support controlnet inferencer by @Z-Fran in #1891

- [Feature] support Albumentations augmentation transformations and pipeline by @Z-Fran in #1894

- [Feature] Add Attention Injection for unet by @liuwenran in #1895

- [Enhance] update benchmark scripts by @Z-Fran in #1907

- [Enhancement] update mmagic docs by @crazysteeaam in #1920

- [Enhancement] Support Prompt-to-prompt, ddim inversion and null-text inversion by @FerryHuang in #1908

- [CodeCamp2023-302] Support MMagic visualization and write a user guide by @aptsunny in #1939

- [Feature] Support Textual Inversion by @LeoXing1996 in #1822

- [Feature] Support stable diffusion inpaint by @Taited in #1976

- [Enhancement] Adopt BaseModule for some models by @LeoXing1996 in #1543

- [MMSIG] Support DeblurGANv2 inference by @xiaomile in #1955

- [CodeCamp2023-647] Add new configs of EG3D by @RangeKing in #1985
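Noise offset (#1880) generally refers to adding a small random constant on top of the usual Gaussian noise during diffusion training. The constant is drawn once per channel (and per sample). This makes it easier for the model to shift overall image brightness. Below is a stdlib-only sketch for a single (C, H, W) latent stored as nested lists. The function name and the noise_offset value are illustrative assumptions, and MMagic's implementation and defaults may differ.

```python
import random


def noise_with_offset(shape, noise_offset=0.1, rng=None):
    """Return Gaussian noise of shape (C, H, W) with a per-channel offset.

    Each channel gets one extra scalar, noise_offset * N(0, 1), added to
    every element, on top of the usual element-wise N(0, 1) noise.
    """
    rng = rng if rng is not None else random.Random()
    channels, height, width = shape
    noise = []
    for _ in range(channels):
        # One offset shared by all pixels of this channel.
        offset = noise_offset * rng.gauss(0.0, 1.0)
        noise.append([[rng.gauss(0.0, 1.0) + offset for _ in range(width)]
                      for _ in range(height)])
    return noise
```

With the same seed, the result for noise_offset=0.5 differs from the result for noise_offset=0.0 by one constant per channel. That constant shift is exactly what the offset is meant to add.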

Bug Fixes

- Fix dtype error in StableDiffusion and DreamBooth training by @LeoXing1996 in #1879

- Fix gui VideoSlider bug by @Z-Fran in #1885

- Fix init_model and glide demo by @Z-Fran in #1888

- Fix InstColorization bug when dim=3 by @Z-Fran in #1901

- Fix sd and controlnet fp16 bugs by @Z-Fran in #1914

- Fix num_images_per_prompt in controlnet by @LeoXing1996 in #1936

- Revise metafile for sd-inpainting to fix inferencer init by @LeoXing1996 in #1995

New Contributors

- @wyyang23 made their first contribution in #1886

- @yehuixie made their first contribution in #1912

- @crazysteeaam made their first contribution in #1920

- @BUPT-NingXinyu made their first contribution in #1921

- @zhjunqin made their first contribution in #1918

- @xuesheng1031 made their first contribution in #1923

- @wslgqq277g made their first contribution in #1934

- @LYMDLUT made their first contribution in #1933

- @RangeKing made their first contribution in #1930

- @xin-li-67 made their first contribution in #1932

- @chg0901 made their first contribution in #1931

- @aptsunny made their first contribution in #1939

- @YanxingLiu made their first contribution in #1943

- @tackhwa made their first contribution in #1937

- @Geo-Chou made their first contribution in #1940

- @qsun1 made their first contribution in #1956

- @ththth888 made their first contribution in #1961

- @sijiua made their first contribution in #1967

- @MING-ZCH made their first contribution in #1982

- @AllYoung made their first contribution in #1996

MMagic v1.0.1 Release

New Features & Improvements

- Support tomesd for StableDiffusion speed-up. #1801

- Support inferencers for all inpainting, matting, and image restoration models. #1833, #1873

- Support animated drawings in projects. #1837

- Support Style-Based Global Appearance Flow for Virtual Try-On in projects. #1786

- Support a tokenizer wrapper and EmbeddingLayerWithFixes. #1846
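Textual Inversion-style methods can represent a new concept with a placeholder token backed by one or more learnable embedding vectors. Below is a toy, string-level sketch of the expansion step that such a tokenizer wrapper performs. The class name, the num_vec_per_token parameter and the token_i naming scheme are assumptions for illustration. They are not MMagic's TokenizerWrapper or EmbeddingLayerWithFixes API.

```python
class ToyTokenizerWrapper:
    """Hypothetical wrapper mapping one placeholder token to several sub-tokens."""

    def __init__(self):
        self.placeholder_map = {}

    def add_placeholder_token(self, token, num_vec_per_token=1):
        if token in self.placeholder_map:
            raise ValueError(f'{token!r} is already registered')
        if num_vec_per_token == 1:
            self.placeholder_map[token] = [token]
        else:
            # Each sub-token would be backed by its own trainable embedding.
            self.placeholder_map[token] = [
                f'{token}_{i}' for i in range(num_vec_per_token)]

    def replace_placeholder_tokens(self, text):
        """Expand every registered placeholder in a whitespace-split prompt."""
        expanded = []
        for word in text.split():
            expanded.extend(self.placeholder_map.get(word, [word]))
        return ' '.join(expanded)
```

For example, after add_placeholder_token('<cat-toy>', num_vec_per_token=3), the prompt 'a photo of <cat-toy>' expands to 'a photo of <cat-toy>_0 <cat-toy>_1 <cat-toy>_2'.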

Bug Fixes

- Fix install requirements. #1819

- Fix inst-colorization PackInputs. #1828, #1827

- Fix inferencer in pip-install. #1875

New Contributors

MMagic v1.0.0 Release

We are excited to announce the release of MMagic v1.0.0 that inherits from MMEditing and MMGeneration.

Since its inception, MMEditing has been the preferred algorithm library for many super-resolution, editing, and generation tasks, helping research teams win more than 10 top international competitions and supporting over 100 GitHub ecosystem projects. After iterative updates with the OpenMMLab 2.0 framework and the merger with MMGeneration, MMEditing has become a powerful tool that supports low-level algorithms based on both GANs and CNNs.

Today, MMEditing embraces Generative AI and transforms into a more advanced and comprehensive AIGC toolkit: MMagic (Multimodal Advanced, Generative, and Intelligent Creation).

In MMagic, we support 53+ models across multiple tasks, such as Stable Diffusion fine-tuning, text-to-image generation, image and video restoration, super-resolution, editing, and generation. With excellent training and experiment management support from MMEngine, MMagic provides more agile and flexible experimental support for researchers and AIGC enthusiasts and helps you on your AIGC exploration journey. With MMagic, experience more magic in generation! Let's open a new era beyond editing together. More than Editing, Unlock the Magic!

Highlights

1. New Models

We support 11 new models in 4 new tasks.

- Text2Image / Diffusion
  - ControlNet
  - DreamBooth
  - Stable Diffusion
  - Disco Diffusion
  - GLIDE
  - Guided Diffusion

- 3D-aware Generation
  - EG3D

- Image Restoration
  - NAFNet
  - Restormer
  - SwinIR

- Image Colorization
  - InstColorization


2. Magic Diffusion Model

For diffusion models, we provide the following "magic":

- Support image generation based on Stable Diffusion and Disco Diffusion.

- Support fine-tuning methods such as DreamBooth and DreamBooth LoRA.

- Support controllability in text-to-image generation using ControlNet.

- Support acceleration and optimization strategies based on xFormers to improve training and inference efficiency.

- Support video generation based on MultiFrame Render.

  MMagic supports the generation of long videos in various styles through ControlNet and MultiFrame Render.

  Prompt keywords: a handsome man, silver hair, smiling, play basketball

  Prompt keywords: a girl, black hair, white pants, smiling, play basketball

  Prompt keywords: a handsome man

- Support calling basic models and sampling strategies through DiffuserWrapper.

- SAM + MMagic = Generate Anything!

  SAM (Segment Anything Model) is a popular model these days and can also provide more support for MMagic! If you want to create your own animation, you can go to OpenMMLab PlayGround.

3. Upgraded Framework

To improve your "spellcasting" efficiency, we have made the following adjustments to the "magic circuit":

- Using MMEngine and MMCV from the OpenMMLab 2.0 framework, we decompose the editing framework into different modules, so one can easily construct a customized editor framework by combining them. You can define the training process just like playing with Legos, with rich components and strategies provided. In MMagic, you have complete control over the training process through different levels of APIs.

- Support 33+ algorithms accelerated by PyTorch 2.0.

- Refactor DataSample to support the combination and splitting of batch dimensions.

- Refactor DataPreprocessor and unify the data format for various tasks during training and inference.

- Refactor MultiValLoop and MultiTestLoop, supporting the evaluation of both generation-type metrics (e.g. FID) and reconstruction-type metrics (e.g. SSIM), and supporting the evaluation of multiple datasets at once.

- Support visualization to local files or with TensorBoard and wandb.
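Evaluating several datasets at once, as the refactored MultiValLoop and MultiTestLoop allow, amounts to pairing each dataloader with its own evaluator. The results are then merged under per-dataset prefixes. Below is a framework-free sketch of that idea. The names evaluate_multiple and mean_abs_error and the 'prefix/name' key format are hypothetical. They do not reflect MMagic's actual loop or metric classes.

```python
def mean_abs_error(pairs):
    """Mean absolute error over (prediction, target) pairs."""
    return sum(abs(pred - target) for pred, target in pairs) / len(pairs)


def evaluate_multiple(model, dataloaders, evaluators):
    """Evaluate `model` on several datasets in one pass.

    dataloaders: list of datasets, each an iterable of (input, target) pairs.
    evaluators:  list (same length) of metric lists; each metric is a
                 (prefix, name, fn) tuple and fn maps pairs to a scalar.
    Returns one flat dict keyed by 'prefix/name'.
    """
    if len(dataloaders) != len(evaluators):
        raise ValueError('expected exactly one evaluator per dataloader')
    results = {}
    for loader, metrics in zip(dataloaders, evaluators):
        # Run the model once per dataset and reuse the outputs for every metric.
        pairs = [(model(x), y) for x, y in loader]
        for prefix, name, fn in metrics:
            key = f'{prefix}/{name}'
            # Prefixes keep metrics from different datasets from colliding.
            if key in results:
                raise KeyError(f'duplicate metric key {key!r}')
            results[key] = fn(pairs)
    return results
```

The prefix check mirrors the advice to give each metric group its own prefix. Without a prefix, two datasets reporting the same metric would overwrite each other.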

New Features & Improvements

- Support 53+ algorithms, 232+ configs, 213+ checkpoints, 26+ loss functions, and 20+ metrics.

- Support ControlNet animation and a Gradio GUI.

- Support Inferencer and Demo using high-level inference APIs.

- Support a Gradio GUI for inpainting inference.

- Support qualitative comparison tools.

- Enable projects.

- Improve converter scripts and documents for datasets.

MMEditing v1.0.0rc7 Release

Highlights

We are excited to announce the release of MMEditing 1.0.0rc7. This release supports 51+ models, 226+ configs and 212+ checkpoints in MMGeneration and MMEditing. We highlight the following new features:

- Support DiffuserWrapper

- Support ControlNet (training and inference).

- Support PyTorch 2.0.

New Features & Improvements

- Support DiffuserWrapper. #1692

- Support ControlNet (training and inference). #1744

- Support PyTorch 2.0 (33+ models compile successfully with the 'inductor' backend). #1742

- Support inferencers for image and video super-resolution models. #1662, #1720

- Refactor tools/get_flops script. #1675

- Refactor dataset_converters and documents for datasets. #1690

- Move stylegan ops to MMCV. #1383

Bug Fixes

Contributors

A total of 8 developers contributed to this release.

Thanks @LeoXing1996, @Z-Fran, @plyfager, @zengyh1900, @liuwenran, @ryanxingql, @HAOCHENYE, @VongolaWu

New Contributors

- @HAOCHENYE made their first contribution in #1712

Full Changelog: v1.0.0rc6...v1.0.0rc7

MMEditing v1.0.0rc6 Release

Highlights

We are excited to announce the release of MMEditing 1.0.0rc6. This release supports 50+ models, 222+ configs and 209+ checkpoints in MMGeneration and MMEditing. We highlight the following new features:

- Support Gradio GUI for inpainting inference.

- Support Colorization, Translation and GAN model inference.

Backwards Incompatible changes

- GenValLoop and MultiValLoop have been merged into EditValLoop, and GenTestLoop and MultiTestLoop have been merged into EditTestLoop. Use cases:

Case 1: metrics on a single dataset

>>> # add the following lines in your config
>>> # 1. use `EditValLoop` instead of `ValLoop` in MMEngine
>>> val_cfg = dict(type='EditValLoop')
>>> # 2. specify EditEvaluator instead of Evaluator in MMEngine
>>> val_evaluator = dict(
>>>     type='EditEvaluator',
>>>     metrics=[
>>>         dict(type='PSNR', crop_border=2, prefix='Set5'),
>>>         dict(type='SSIM', crop_border=2, prefix='Set5'),
>>>     ])
>>> # 3. define dataloader
>>> val_dataloader = dict(...)

Case 2: different metrics on different datasets

>>> # add the following lines in your config
>>> # 1. use `EditValLoop` instead of `ValLoop` in MMEngine
>>> val_cfg = dict(type='EditValLoop')
>>> # 2. specify a list of EditEvaluators
>>> # do not forget to add a prefix for each metric group
>>> div2k_evaluator = dict(
>>>     type='EditEvaluator',
>>>     metrics=dict(type='SSIM', crop_border=2, prefix='DIV2K'))
>>> set5_evaluator = dict(
>>>     type='EditEvaluator',
>>>     metrics=[
>>>         dict(type='PSNR', crop_border=2, prefix='Set5'),
>>>         dict(type='SSIM', crop_border=2, prefix='Set5'),
>>>     ])
>>> # define evaluator config
>>> val_evaluator = [div2k_evaluator, set5_evaluator]
>>> # 3. specify a list of dataloaders, one for each metric group
>>> div2k_dataloader = dict(...)
>>> set5_dataloader = dict(...)
>>> # define dataloader config
>>> val_dataloader = [div2k_dataloader, set5_dataloader]

- Support stack and split for EditDataSample. Use case:

from copy import deepcopy

import torch
from mmedit.structures import EditDataSample

# Example for `split`
# (`outputs`, `noise`, `sample_kwargs` and `sample_model` come from the
# preceding sampling code and are not defined here)
gen_sample = EditDataSample()
gen_sample.fake_img = outputs  # tensor
gen_sample.noise = noise  # tensor
gen_sample.sample_kwargs = deepcopy(sample_kwargs)  # dict
gen_sample.sample_model = sample_model  # string
# set allow_nonseq_value as True to copy non-sequential data (sample_kwargs and sample_model for this example)
batch_sample_list = gen_sample.split(allow_nonseq_value=True)

# Example for `stack`
data_sample1 = EditDataSample()
data_sample1.set_gt_label(1)
data_sample1.set_tensor_data({'img': torch.randn(3, 4, 5)})
data_sample1.set_data({'mode': 'a'})
data_sample1.set_metainfo({
    'channel_order': 'rgb',
    'color_flag': 'color'
})
data_sample2 = EditDataSample()
data_sample2.set_gt_label(2)
data_sample2.set_tensor_data({'img': torch.randn(3, 4, 5)})
data_sample2.set_data({'mode': 'b'})
data_sample2.set_metainfo({
    'channel_order': 'rgb',
    'color_flag': 'color'
})
data_sample_merged = EditDataSample.stack([data_sample1, data_sample2])

- GenDataPreprocessor has been merged into EditDataPreprocessor.
  - No changes are required other than changing the type field in config.
  - Users do not need to define input_view and output_view, since the shape of mean is inferred automatically.
  - In the evaluation stage, all tensors will be converted to BGR (for three-channel images) and [0, 255].

- PixelData has been removed.

- For BaseGAN/CondGAN models, real images are passed from data_samples.gt_img instead of inputs['img'].
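The split/stack pair can be pictured with plain Python containers. split turns per-field batches into one sample per item, copying non-sequential values only when allowed. stack reverses this. ToySample is a hypothetical stand-in that uses lists where EditDataSample uses tensors. It illustrates the semantics rather than MMagic's implementation; in particular, how the real stack treats metainfo may differ.

```python
import copy


class ToySample:
    """Hypothetical stand-in for a batched data sample (not EditDataSample)."""

    def __init__(self, **fields):
        self.fields = dict(fields)

    def split(self, allow_nonseq_value=False):
        """Turn one batched sample into a list of per-item samples."""
        seq_lens = {len(v) for v in self.fields.values()
                    if isinstance(v, (list, tuple))}
        if len(seq_lens) != 1:
            raise ValueError('sequence fields must share one batch size')
        batch_size = seq_lens.pop()
        items = []
        for i in range(batch_size):
            item = {}
            for key, value in self.fields.items():
                if isinstance(value, (list, tuple)):
                    item[key] = value[i]
                elif allow_nonseq_value:
                    # Non-sequential values (e.g. a dict or string) are copied
                    # into every item, like sample_kwargs in the example above.
                    item[key] = copy.deepcopy(value)
                else:
                    raise TypeError(f'field {key!r} is not a sequence')
            items.append(ToySample(**item))
        return items

    @staticmethod
    def stack(samples):
        """Merge per-item samples back into one batched sample."""
        keys = samples[0].fields.keys()
        return ToySample(**{k: [s.fields[k] for s in samples] for k in keys})
```

For example, splitting ToySample(img=[1, 2], mode='a') with allow_nonseq_value=True yields two samples. Both have mode 'a'. Stacking them back gives img [1, 2] and mode ['a', 'a'].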

New Features & Improvements

- Refactor FileIO. #1572

- Refactor registry. #1621

- Refactor Random degradations. #1583

- Refactor DataSample, DataPreprocessor, Metric and Loop. #1656

- Use mmengine.model.BaseModule instead of torch.nn.Module. #1491

- Refactor Main Page. #1609

- Support Gradio gui of Inpainting inference. #1601

- Support Colorization inferencer. #1588

- Support Translation models inferencer. #1650

- Support GAN models inferencer. #1653, #1659

- Print config tool. #1590

- Improve type hints. #1604

- Update Chinese documents of metrics and datasets. #1568, #1638

- Update Chinese documents of BigGAN and Disco-Diffusion. #1620

- Update Evaluation and README of Guided-Diffusion. #1547

Bug Fixes

- Fix the meaning of momentum in EMA. #1581

- Fix output dtype of RandomNoise. #1585

- Fix pytorch2onnx tool. #1629

- Fix API documents. #1641, #1642

- Fix loading RealESRGAN EMA weights. #1647

- Fix arg passing bug of dataset_converters scripts. #1648
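Two EMA conventions are in common use. In one, the coefficient ("momentum") weights the new value; in the other, the coefficient ("decay") weights the running average. The EMA momentum fix (#1581) concerns which meaning applies. The sketch below shows why the distinction matters. It does not claim which convention #1581 adopted, and the function names are hypothetical.

```python
def ema_momentum(averaged, current, momentum):
    """Convention A: momentum weights the NEW value."""
    return (1 - momentum) * averaged + momentum * current


def ema_decay(averaged, current, decay):
    """Convention B: decay weights the OLD (averaged) value."""
    return decay * averaged + (1 - decay) * current


def run(update, coeff, values, start=0.0):
    """Fold a stream of values into an EMA with the given update rule."""
    averaged = start
    for value in values:
        averaged = update(averaged, value, coeff)
    return averaged
```

The two rules agree when momentum = 1 - decay. However, passing a decay-style value such as 0.999 as momentum makes the "average" almost track the latest value instead of changing slowly.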

Contributors

A total of 17 developers contributed to this release.

Thanks @plyfager, @LeoXing1996, @Z-Fran, @zengyh1900, @VongolaWu, @liuwenran, @austinmw, @dienachtderwelt, @liangzelong, @i-aki-y, @xiaomile, @Li-Qingyun, @vansin, @Luo-Yihang, @ydengbi, @ruoningYu, @triple-Mu

New Contributors

- @dienachtderwelt made their first contribution in #1578

- @i-aki-y made their first contribution in #1590

- @triple-Mu made their first contribution in #1618

- @Li-Qingyun made their first contribution in #1640

- @Luo-Yihang made their first contribution in #1648

- @ydengbi made their first contribution in #1557

Full Changelog: v1.0.0rc5...v1.0.0rc6

MMEditing v0.16.1 Release

New Features & Improvements

Bug Fixes

- Fix a bug in the TTSR configuration file. #1435

- Fix RealESRGAN test dataset. #1489

- Fix dump config in train scripts. #1584

- Fix dynamic ONNX export of pixel-unshuffle. #1637
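Pixel-unshuffle (space-to-depth) rearranges each r x r spatial block into channels. This turns a (C, H, W) input into a (C*r*r, H/r, W/r) output. Below is a plain-Python sketch of the rearrangement itself, for reference. The channel ordering follows our understanding of PyTorch's pixel_unshuffle. The sketch says nothing about how #1637 made the ONNX export dynamic.

```python
def pixel_unshuffle(x, r):
    """Space-to-depth on a (C, H, W) nested list: returns (C*r*r, H//r, W//r).

    Output channel c*r*r + i*r + j holds the input pixels at rows h*r + i
    and columns w*r + j of input channel c.
    """
    c_in, h_in, w_in = len(x), len(x[0]), len(x[0][0])
    if h_in % r or w_in % r:
        raise ValueError('height and width must be divisible by r')
    h_out, w_out = h_in // r, w_in // r
    out = []
    for c in range(c_in):
        for i in range(r):          # row offset inside each r x r block
            for j in range(r):      # column offset inside each r x r block
                out.append([[x[c][h * r + i][w * r + j] for w in range(w_out)]
                            for h in range(h_out)])
    return out
```

For example, pixel_unshuffle([[[1, 2], [3, 4]]], 2) moves the four pixels of the single 2 x 2 block into four 1 x 1 channels, in the order 1, 2, 3, 4.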

Contributors

A total of 10 developers contributed to this release.

Thanks @LeoXing1996, @Z-Fran, @zengyh1900, @liuky74, @KKIEEK, @zeakey, @Sqhttwl, @yhna940, @gihwan-kim, @vansin

New Contributors

- @liuky74 made their first contribution in #1435

- @KKIEEK made their first contribution in #775

- @zeakey made their first contribution in #1584

- @Sqhttwl made their first contribution in #1627

- @yhna940 made their first contribution in #1637

- @gihwan-kim made their first contribution in #1510

Full Changelog: v0.16.0...0.16.1

MMEditing v1.0.0rc5 Release

Highlights

We are excited to announce the release of MMEditing 1.0.0rc5. This release supports 49+ models, 180+ configs and 177+ checkpoints in MMGeneration and MMEditing. We highlight the following new features:

- Support Restormer

- Support GLIDE

- Support SwinIR

- Support Stable Diffusion

New Features & Improvements

- Disco notebook.(#1507)

- Revise test requirements and CI.(#1514)

- Recursively generate summary and docstring.(#1517)

- Enable projects.(#1526)

- Support mscoco dataset.(#1520)

- Improve Chinese documents.(#1532)

- Type hints.(#1481)

- Update download link.(#1554)

- Update deployment guide.(#1551)

Bug Fixes

- Fix documentation link checker.(#1522)

- Fix ssim first channel bug.(#1515)

- Fix restormer ut.(#1550)

- Fix extract_gt_data of realesrgan.(#1542)

- Fix model index.(#1559)

- Fix config path in disco-diffusion.(#1553)

- Fix text2image inferencer.(#1523)

Contributors

A total of 16 developers contributed to this release.

Thanks @plyfager, @LeoXing1996, @Z-Fran, @zengyh1900, @VongolaWu, @liuwenran, @AlexZou14, @lvhan028, @xiaomile, @ldr426, @austin273, @whu-lee, @willaty, @curiosity654, @Zdafeng, @Taited

New Contributors

- @xiaomile made their first contribution in #1481

- @ldr426 made their first contribution in #1542

- @austin273 made their first contribution in #1553

- @whu-lee made their first contribution in #1539

- @willaty made their first contribution in #1541

- @curiosity654 made their first contribution in #1556

- @Zdafeng made their first contribution in #1476

- @Taited made their first contribution in #1534

Full Changelog: v1.0.0rc4...v1.0.0rc5

Bump to version V1.0.0rc4

v1.0.0rc4 (06/12/2022)

Highlights

We are excited to announce the release of MMEditing 1.0.0rc4. This release supports 45+ models, 176+ configs and 175+ checkpoints in MMGeneration and MMEditing. We highlight the following new features:

- Support High-level APIs.

- Support diffusion models.

- Support Text2Image Task.

- Support 3D-Aware Generation.

New Features & Improvements

- Refactor high-level APIs. (#1410)

- Support disco-diffusion text-2-image. (#1234, #1504)

- Support EG3D. (#1482, #1493, #1494, #1499)

- Support NAFNet model. (#1369)

Bug Fixes

- Fix srgan train config. (#1441)

- Fix cain config. (#1404)

- Fix rdn and srcnn train configs. (#1392)

- Revise config and pretrain model loading in esrgan. (#1407)

Contributors

A total of 14 developers contributed to this release.

Thanks @plyfager, @LeoXing1996, @Z-Fran, @zengyh1900, @VongolaWu, @gaoyang07, @ChangjianZhao, @zxczrx123, @jackghosts, @liuwenran, @CCODING04, @RoseZhao929, @shaocongliu, @liangzelong.

New Contributors

- @gaoyang07 made their first contribution in #1372

- @ChangjianZhao made their first contribution in #1461

- @zxczrx123 made their first contribution in #1462

- @jackghosts made their first contribution in #1463

- @liuwenran made their first contribution in #1410

- @CCODING04 made their first contribution in #783

- @RoseZhao929 made their first contribution in #1474

- @shaocongliu made their first contribution in #1470

- @liangzelong made their first contribution in #1488