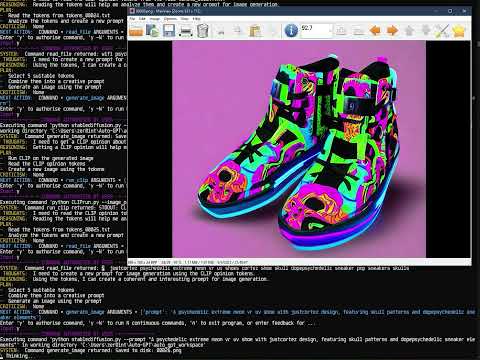

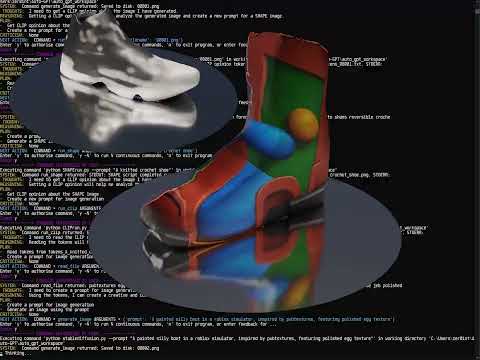

(We're essentially just prompting GPT with CLIP's "opinion" tokens for what it "sees" in an image, which is nevertheless surprisingly effective!)

- GPT autonomous infinite variation prompting of text-to-image and text-to-3D

- GPT visual websearch based on image content

- your_idea_here

Please tag me on Twitter: @zer0int1 if you use this for anything; I'd love to see it!

Depending on your use case, you might also need:

- ✔️ Shap-E by OpenAI (Text-to-3D)

- ✔️ Stable Diffusion by Stability-AI (Text-to-Image, for the command line and for running locally, as implemented in this repo)

Other third-party credits:

- CLIP Gradient Ascent: Adaptation of the original notebook "Closed Test Ascending CLIPtext" by @advadnoun

- CLIP GradCAM: https://github.com/kevinzakka/clip_playground

- Ensure the prerequisites (the repos above) are installed and working

- Put this repo (git clone, or download and extract the zip) in your C:/Users/JohnDoe or equivalent "user home" folder ("z" is no longer treated as a null byte; I removed that, so your username can contain a "z", like mine)

- Edit Auto-GPT/auto_gpt_workspace/CLIP.py according to your hardware (VRAM requirement table inside; yes you can run a small CLIP with 6 GB of VRAM!)

- From Auto-GPT/auto_gpt_workspace, copy CLIP.py and (if applicable) SHAPE.py to your C:/Users/JohnDoe (one level above the Auto-GPT folder)

- Edit Auto-GPT/autogpt/visionconfig.py (should be self-explanatory: define the absolute paths for your local system in this config)

- In your .env, remove the comment and set EXECUTE_LOCAL_COMMANDS=True and RESTRICT_TO_WORKSPACE=False

- Pick one of the .yaml files from Auto-GPT/ai_settings_examples (see README.TXT for details!), copy it to the main Auto-GPT folder and rename it, so you have e.g. C:/Users/JohnDoe/Auto-GPT/ai_settings.yaml

- (Optional) put your own images (filenames as per the ai_settings.yaml) in the Auto-GPT/images folder (or use the example images I provided)

- (Optional, case: running local stable diffusion): Edit Auto-GPT/auto_gpt_workspace/stablediffusion.py to match the model / config of SD you want to use

- (Optional, recommended) Make sure that everything is working by running the scripts independently outside of Auto-GPT (see below)
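Before running the scripts, the `.env` step from the list above can be double-checked with a few lines of Python. This is only a minimal sketch: the two flag names and values come from the setup step, while `parse_env` and `env_problems` are hypothetical helper names, and the check assumes plain `KEY=value` lines with the values written exactly as `True` and `False`.

```python
from pathlib import Path

# Flags and values as given in the setup step; exact spelling is assumed.
REQUIRED = {"EXECUTE_LOCAL_COMMANDS": "True", "RESTRICT_TO_WORKSPACE": "False"}


def parse_env(text: str) -> dict:
    """Return KEY -> value for every uncommented KEY=value line."""
    settings = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue  # blank, still commented out, or not an assignment
        key, _, value = line.partition("=")
        settings[key.strip()] = value.strip()
    return settings


def env_problems(text: str) -> list:
    """Describe every required flag that is missing, commented out, or wrong."""
    settings = parse_env(text)
    problems = []
    for key, wanted in REQUIRED.items():
        found = settings.get(key)
        if found is None:
            problems.append(f"{key} is missing or still commented out (want {wanted})")
        elif found != wanted:
            problems.append(f"{key} is {found} (want {wanted})")
    return problems


if __name__ == "__main__":
    env_file = Path(".env")  # assumes you run this from the main Auto-GPT folder
    if env_file.exists():
        for problem in env_problems(env_file.read_text(encoding="utf-8")):
            print(problem)
    else:
        print("No .env found in the current folder")
```

No output means both flags are set as described; any printed line names the flag to fix.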

`python CLIPrun.py --image_path "C:/Users/JohnDoe/Auto-GPT/images/0001.png"`

Replace JohnDoe with your username and wait a minute or two (depending on your GPU and the settings you made in CLIP.py). You should then have a CLIP opinion as tokens_0001.txt in Auto-GPT/auto_gpt_workspace.
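If you'd rather script this check than eyeball it, something like the following could work. It is a rough sketch under assumptions: the `--image_path` flag and the `tokens_<image name>.txt` naming are taken from the example above, but the function names are made up for illustration, and `script_dir` (the folder that holds CLIPrun.py) is something you have to supply yourself.

```python
import subprocess
import sys
from pathlib import Path


def clip_command(image: Path) -> list:
    """Command line for CLIPrun.py, using the --image_path flag from the example."""
    return [sys.executable, "CLIPrun.py", "--image_path", image.as_posix()]


def expected_tokens_file(home: Path, image: Path) -> Path:
    """0001.png -> <home>/Auto-GPT/auto_gpt_workspace/tokens_0001.txt"""
    return home / "Auto-GPT" / "auto_gpt_workspace" / f"tokens_{image.stem}.txt"


def run_clip_and_check(script_dir: Path, home: Path, image: Path) -> bool:
    """Run CLIPrun.py from script_dir and report whether the tokens file appeared.

    script_dir is an assumption: point it at wherever CLIPrun.py lives on your system.
    """
    subprocess.run(clip_command(image), cwd=script_dir, check=True)
    return expected_tokens_file(home, image).is_file()
```

`run_clip_and_check` raises `subprocess.CalledProcessError` if the script itself fails, so a `False` return means the script ran but the tokens file is not where it is expected.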

Optional:

`python SHAPErun.py --prompt "A Pontiac Firebird car"`

You should find s_000.png and the respective .ply in the Auto-GPT/images folder. When you run this FOR THE FIRST TIME EVER, it will download and build the Shap-E models in Auto-GPT/auto_gpt_workspace/shap_e_model_cache. Please be patient (this takes minutes, depending on your internet speed)!

🤖👀 BONUS: See what CLIP sees by computing (fast!) a heatmap highlighting which regions in the image activate the most to a given caption.

`python manual_gradcam.py --image "0001.png" --txt "tokens_0001.txt"`

Caption = the tokens CLIP 'saw' in the image (the "opinion" file tokens_XXXXX.txt that GPT gets back from using "run_clip" on XXXXX.png in Auto-GPT). If you're wondering WTF CLIP saw in your image, and where, run this in a separate command prompt "on the side", with whatever GPT last used in Auto-GPT. It will dump heatmap images for all CLIP tokens, for all four saliency layers of the CLIP model, in the Auto-GPT/GradCAM folder. For GradCAM requirements, see Auto-GPT/autogpt/commands/CLIP_gradcam.py; it is an adaptation of an ipynb notebook, and the pip install requirements are left in as comments at the very top.

ToDo: Implement as "y -D" option that Auto-GPT accepts, same as "y -N", to execute after the next time run_clip is executed.


Oddly enough, the relative output path .\auto_gpt_workspace\clip_tokens.txt ensures that GPT-3.5 does not get confused about where the CLIP token "opinion" is. GPT-4, however, will try once, at the beginning, to read_file from the wrong place. Simply approve with y; the AI will "think" about the file-not-found error, correct itself, and never make that mistake again. Sorry about the small waste of GPT-4 tokens, but this is the best way to make sure it works out of the box for both GPT models.


Make sure the folder structure is exactly as described above. Executing subprocesses (.py files) from different locations is a delicate thing.
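A quick way to verify the layout is a small pre-flight script. The sketch below only checks the paths named in the setup steps (SHAPE.py is left out because it is optional); `EXPECTED` and `missing_paths` are illustrative names, not part of this repo.

```python
from pathlib import Path

# Paths relative to the "user home" folder, as named in the setup steps.
EXPECTED = [
    "CLIP.py",
    "Auto-GPT/ai_settings.yaml",
    "Auto-GPT/autogpt/visionconfig.py",
    "Auto-GPT/auto_gpt_workspace",
    "Auto-GPT/images",
]


def missing_paths(home: Path) -> list:
    """Return every expected file or folder that does not exist under home."""
    return [rel for rel in EXPECTED if not (home / rel).exists()]


if __name__ == "__main__":
    # Path.home() resolves to e.g. C:/Users/JohnDoe on Windows.
    for rel in missing_paths(Path.home()):
        print(f"Missing: {rel}")
```

If it prints nothing, the pieces are at least where the subprocess calls will look for them.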


Delete the auto-gpt.json in Auto-GPT/auto_gpt_workspace if you change the .yaml or encounter issues.


For local stable diffusion: I am using shutil.copyfile instead of shutil.move, which means I am trashing up your stablediffusion/outputs folder, as the images are copied (not moved) to Auto-GPT/images. Why? Because I couldn't figure out a way to check ONLY for existing stable diffusion images, e.g. 00001.png but NOT 0001.png. Naming the files something slightly more complex, like SD_00001.png, confuses GPT-3.5 instead. So: better to have a bit of redundant trash than overwritten files, right? Feel free to implement something that works, if you know how; I'd be delighted!
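One possible approach to the name check, offered as an untested-in-this-repo sketch rather than a drop-in fix: match the file name against an anchored regular expression, so that exactly five digits are required. It assumes stable diffusion outputs always look like 00001.png (five digits) and the other images like 0001.png (four digits), as in the example above; `copy_new_sd_images` is a hypothetical helper name.

```python
import re
import shutil
from pathlib import Path

# Exactly five digits plus ".png": matches 00001.png,
# rejects 0001.png (four digits) and SD_00001.png (prefix).
SD_NAME = re.compile(r"\d{5}\.png")


def copy_new_sd_images(outputs: Path, images: Path) -> list:
    """Copy five-digit PNGs from outputs to images without overwriting anything."""
    copied = []
    for src in sorted(outputs.iterdir()):
        if not src.is_file() or not SD_NAME.fullmatch(src.name):
            continue  # not a stable diffusion output by the naming assumption
        dst = images / src.name
        if dst.exists():
            continue  # already copied on an earlier run; leave it alone
        shutil.copyfile(src, dst)
        copied.append(src.name)
    return copied
```

`fullmatch` is what keeps 0001.png out: the pattern has to cover the whole file name, so four digits never qualify. This only addresses the name check; whether the copy can then safely become a move depends on how stable diffusion picks its next file number, which is not covered here.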

- CLIP SEES WHATEVER CLIP WANTS TO SEE (doesn't have to be related to what you see) 🤯

You probably shouldn't run Auto-GPT in "autonomous" mode anyway, but you'll also want to ACTUALLY proofread the GPT-generated prompt ❗❗ carefully rather than just approving it! That is especially the case if you are not running locally, as spamming offensive words might just get you banned from a text-to-image API ❗❗

So a harmless image (your opinion) might lead to offensive, racist, biased, sexist output (CLIP opinion) ❗. Especially true if non-English text is present in the image.

- 👉 More info on typographic attacks and why CLIP is so obsessed with text: Multimodal Neurons

- 👉 Check the model-card.md and heed the warnings from OpenAI: CLIP Model Card

Use the above CLIPrun.py with pepe.png for an example that shouldn't be too toxic, but proves a point with regard to "oh yes, CLIP knows - CLIP was trained on the internet".

PS: And yes, GPT-3.5 / GPT-4 will accept these terms and make a prompt with them. They might conclude "the CLIP opinion is not very useful" and try to do something else; however, the AI can be persuaded to "use the CLIP tokens to make a prompt for run_image" via user feedback, and will then only refrain from using blatantly offensive words like "r*pe". However, CLIP opinion often includes chained "longword" tokens, like e.g. "instarape" - which GPT accepts, and that will in turn be understood by the CLIP inside stable diffusion et al just as well. ...And likely by an API filter, too.

You have been warned. Do whatever floats your boat, but keep it limited to your boat - and don't blame me for getting kick-banned from any text-to-image API. That's all. ❗
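As a proofreading aid (not a replacement for actually reading the prompt), a substring check can catch the chained "longword" case described above, where a plain whole-word comparison would miss it. This is a minimal sketch: `flag_prompt` is a made-up helper, the blocklist is entirely yours to supply, and the placeholder term "badword" stands in for whatever you want to catch.

```python
import re


def flag_prompt(prompt: str, blocklist: set) -> dict:
    """Map each suspicious word in the prompt to the blocklist terms it contains."""
    flagged = {}
    for word in re.findall(r"[a-z0-9*]+", prompt.lower()):
        # Substring test, so a chained token like "instabadword" is caught too.
        hits = sorted(term for term in blocklist if term in word)
        if hits:
            flagged[word] = hits
    return flagged


if __name__ == "__main__":
    # "badword" is a placeholder blocklist entry for illustration only.
    print(flag_prompt("a photo of a cat, instabadword aesthetic", {"badword"}))
```

Substring matching errs on the side of false positives (harmless words that happen to contain a listed term get flagged as well), which seems like the right trade-off when the alternative is an API ban.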

Download the latest stable release from here: https://github.com/Significant-Gravitas/Auto-GPT/releases/latest.

The master branch may often be in a broken state.

Auto-GPT is an experimental open-source application showcasing the capabilities of the GPT-4 language model. This program, driven by GPT-4, chains together LLM "thoughts", to autonomously achieve whatever goal you set. As one of the first examples of GPT-4 running fully autonomously, Auto-GPT pushes the boundaries of what is possible with AI.

AutoGPTDemo_Subs_WithoutFinalScreen.mp4

Demo made by Blake Werlinger

If you can spare a coffee, you can help to cover the costs of developing Auto-GPT and help to push the boundaries of fully autonomous AI! Your support is greatly appreciated. Development of this free, open-source project is made possible by all the contributors and sponsors. If you'd like to sponsor this project and have your avatar or company logo appear below click here.

- 🌐 Internet access for searches and information gathering

- 💾 Long-term and short-term memory management

- 🧠 GPT-4 instances for text generation

- 🔗 Access to popular websites and platforms

- 🗃️ File storage and summarization with GPT-3.5

- 🔌 Extensibility with Plugins

- Check out the wiki

- Get an OpenAI API Key

- Download the latest release

- Follow the installation instructions

- Configure any additional features you want, or install some plugins

- Run the app

Please see the documentation for full setup instructions and configuration options.

This experiment aims to showcase the potential of GPT-4 but comes with some limitations:

- Not a polished application or product, just an experiment

- May not perform well in complex, real-world business scenarios. In fact, if it actually does, please share your results!

- Quite expensive to run, so set and monitor your API key limits with OpenAI!

This project, Auto-GPT, is an experimental application and is provided "as-is" without any warranty, express or implied. By using this software, you agree to assume all risks associated with its use, including but not limited to data loss, system failure, or any other issues that may arise.

The developers and contributors of this project do not accept any responsibility or liability for any losses, damages, or other consequences that may occur as a result of using this software. You are solely responsible for any decisions and actions taken based on the information provided by Auto-GPT.

Please note that the use of the GPT-4 language model can be expensive due to its token usage. By utilizing this project, you acknowledge that you are responsible for monitoring and managing your own token usage and the associated costs. It is highly recommended to check your OpenAI API usage regularly and set up any necessary limits or alerts to prevent unexpected charges.

As an autonomous experiment, Auto-GPT may generate content or take actions that are not in line with real-world business practices or legal requirements. It is your responsibility to ensure that any actions or decisions made based on the output of this software comply with all applicable laws, regulations, and ethical standards. The developers and contributors of this project shall not be held responsible for any consequences arising from the use of this software.

By using Auto-GPT, you agree to indemnify, defend, and hold harmless the developers, contributors, and any affiliated parties from and against any and all claims, damages, losses, liabilities, costs, and expenses (including reasonable attorneys' fees) arising from your use of this software or your violation of these terms.

Stay up-to-date with the latest news, updates, and insights about Auto-GPT by following our Twitter accounts. Engage with the developer and the AI's own account for interesting discussions, project updates, and more.

- Developer: Follow @siggravitas for insights into the development process, project updates, and related topics from the creator of Entrepreneur-GPT.

- Entrepreneur-GPT: Join the conversation with the AI itself by following @En_GPT. Share your experiences, discuss the AI's outputs, and engage with the growing community of users.

We look forward to connecting with you and hearing your thoughts, ideas, and experiences with Auto-GPT. Join us on Twitter and let's explore the future of AI together!